As a note, it’s been a while since I worked with this stuff, and I am a bit rusty, but I will answer the questions to the best of my knowledge.

The equation for f(t) is the impulse response function of a first-order low pass filter. I couldn’t remember the exact derivation, but thankfully, wikipedia has an entry here (scroll down to “impulse response”).

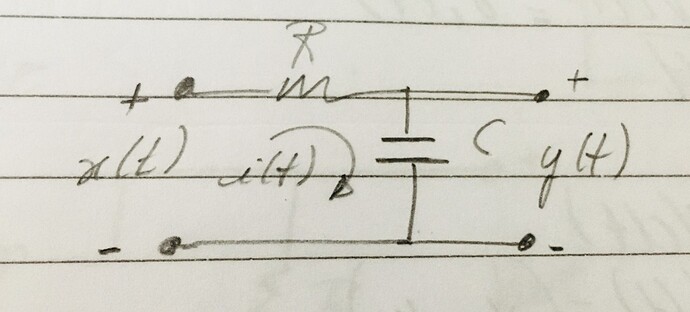

So… the network you linked (tutorial 12) in your post only works for differential equations of the form \frac{dy}{dt} = Ax(t) + By(t). However, since the differential equation of the first order high pass filter is of a slightly different form, the network from tutorial 12 has to be modified to implement the high pass filter.

In order to understand how to modify the network, we’ll need to know how to transform an arbitrary LTI system into a network with neural populations. This is covered in section 8.1 of the Neural Engineering book (by Chris Eliasmith & Charles Anderson). I’ve compiled the relevant pages here for you to reference.

NEF-sec8.1.pdf (2.3 MB)

If you read the section of text, you will see that converting an LTI system (with the A, B, C and D state-space matrices) into an equivalent system using a neural synapse (note that the derivation assumes the neural synapse is an exponential synapse - i.e., a low pass filter), you’d need to modify the A and B matrices such that:

\mathbf{A}' = \tau \mathbf{A} + \mathbf{I} \\

\mathbf{B}' = \tau \mathbf{B}

You can perform the same math for the C and D matrices, but if you don’t use any neural synapses on those connections, those remain unchanged.

If you go back to the tutorial code, this change is what is meant by the comment:

# While the proof is outside the scope of this tutorial, the resulting rule

# is that both your Connections need to get scaled by the synapse value, and

# your recurrent Connection must also add the stored value back in. That is,

# in this case, the input part of the equation becomes

# (x/tau) * synapse

# and the recurrent part becomes

# (-y/tau) * synapse + y

With these changes, a general LTI system can thus be implemented in a neural network as such.

with nengo.Network() as model:

# Some input

u = nengo.Node(lambda t: np.sin(t * tau * 0.5))

# Population representing x

x = nengo.Node(lambda t, x: x, size_in=1)

# The output

y = nengo.Node(size_in=1)

# Connections implementing A, B, C and D matrices. Note that the A and B matrices

# have been modified because their connections include a neural synapse, whereas

# C and D remain unmodified because their synapse are None. If neural synapses are

# required for the C and D connections, the derivations for an IDEAL system become

# a lot more complex. But, for a slightly non-ideal system, the neural synapse

# can be used in the C and D connections without any change to C and D.

nengo.Connection(x, x, synapse=synapse, transform=synapse * A + 1)

nengo.Connection(u, x, synapse=synapse, transform=synapse * B)

nengo.Connection(x, y, synapse=None, transform=C)

nengo.Connection(u, y, synapse=None, transform=D)

pin = nengo.Probe(u)

pout = nengo.Probe(y)

A few notes about the network above:

- The

x population has been replaced by a nengo.Node for simplicity of the plots (there’s no spike noise), but it can be replaced by a nengo.Ensemble without difficulty.

- The C and D connections have a neural synapse of

None. For a “biologically-realistic” network, this isn’t really feasible (the synapse has to be some value in biology). Implementing an ideal LTI system with non-0 synapses for C and D make the network a lot more complicated, but the LTI system can be approximated quite well simply by using a neural synapse there (as long as it’s a small one) without changing C nor D.

- The connections are hard-coded for a single-dimension signal, you’ll need to modify the

x to x connection if your signal is multi-dimensional.

- Since the matrices are static, the connections are implemented using

transform rather than function. As an example, the following two code snippets are mathematically identical (note that the transform method simulates faster since Nengo can optimize it with Numpy operations rather than having to call the Python function at every timestep):

def conn_func(x):

return x * 2

nengo.Connection(ens1, ens2, function=conn_func)

and

nengo.Connection(ens1, ens2, transform=2)

But what values do you use for the A, B, C and D (state-space) matrices? I’d have to admit I cheated a bit here. Instead of going through the derivations, I simply used the built in Nengo function to do it (see also the scipy documentation).

First order low pass filter

The transfer function for a first-order low pass filter (see “Transfer functions”) is

H{s} = \frac{1}{1 + \tau s}

So, using the num=[1] and den=[tau, 1], the tf2ss function returns:

A = -1 / tau

B = 1

C = 1 / tau

D = 0

using those values, we can plug it into the network above and get the desired filtered output.

Note that tutorial 12’s network is similar to the network above, except that the state-space matrices are:

A = -1 / tau

B = 1 / tau

C = 1

D = 0

which turn out to be identical in both cases. Also note that in tutorial 12, the x ensemble actually represents u from the LTI box diagram (since input_function is the \mathbf{B'} matrix), and the y ensemble represents x (since recurrent_function is the \mathbf{A'} matrix). Sorry for the confusion! Additionally, since the y ensemble represents x, the C matrix is implicitly 1.

First order high pass filter

The transfer function for a first-order high pass filter can be found here and is defined as such:

H(s) = \frac{\tau s}{1 + \tau s}

In this case, the parameters to the tf2ss functions are: num=[tau, 0] and den=[tau, 1], and the function produces:

A = -1 / tau

B = 1

C = -1 / tau

D = 1

Once again, if you plug these values into the network above, you should see that the desired filtering behaviour is achieved.

As a side note, the following state-space matrices also implement the high pass filter:

A = -1 / tau

B = -1 / tau

C = 1

D = 1

so, tutorial 12 could be modified as such to implement it:

with model:

stim_x = nengo.Node(0)

x = nengo.Ensemble(n_neurons=50, dimensions=1)

nengo.Connection(stim_x, x)

y = nengo.Ensemble(n_neurons=50, dimensions=1)

tau = 0.5

synapse = 0.1

def input_function(x):

# Note the change from x/tau to -x/tau

return -x / tau * synapse

def recurrent_function(y):

# Same as before

return (-y / tau) * synapse + y

nengo.Connection(x, y, synapse=synapse, function=input_function)

nengo.Connection(y, y, synapse=synapse, function=recurrent_function)

# Need connections for the C and D matrices

out = nengo.Node(size_in=1)

nengo.Connection(y, out, synapse=None) # C = 1

nengo.Connection(x, out, synapse=None) # D = 1

In the modified tutorial 12 code, you’ll notice that since the D matrix is applied to the result of \mathbf{C}x(t), we need to explicitly create an output Node to represent this value, since the y population only represents x(t) (not y(t)).

High pass filter synapse

With all of this information (and as I alluded to before), you can implement the high pass filter using the nengo.Synapse class. From the derivation above, we know the num and den values, so a high pass filter synapse can be defined as such:

from nengo.params import NumberParam

from nengo.utils.filter_design import tf2ss

from nengo.synapses import LinearFilter

class Highpass(LinearFilter):

tau = NumberParam("tau", low=0)

def __init__(self, tau, **kwargs):

super().__init__([tau, 0], [tau, 1], **kwargs)

self.tau = tau

You can create an instance of this filter class and use the .filt method to apply the filter to any signal:

hpf = Highpass(tau)

hpf.filt(my_signal)