- I found some nengo_dl examples. When training with nengo_dl, they call sim.compile. An SNN doesn’t have an optimizer, so why does Nengo use one? Is that converting a CNN to an SNN?

- Why does the SNN have Conv layers? Is that converting a CNN to an SNN?

NengoDL uses TensorFlow to train a spiking neural network as follows:

- A network is created in Nengo or TensorFlow and passed to NengoDL.

- NengoDL calls the TensorFlow functions to compile and train the network. This training is done using the rate-mode equivalents of the neurons in the original network, since the discrete spikes themselves are not differentiable.

- Once the training is done, the network is converted to a spiking neural network using NengoDL’s converter (see example here). The converter preserves the network weights that were trained, but swaps the rate-mode neurons for spiking ones.

Because the training is done using rate neurons and the TensorFlow architecture, NengoDL allows you to specify the optimizer used to train the network. Note that NengoDL’s sim.compile and sim.fit functions are just wrappers of the corresponding TensorFlow functions.
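To make the three steps above concrete, here is a minimal sketch in plain Python. It is not NengoDL code and does not use its API: the function names `rate_neuron` and `spiking_neuron_rate`, the toy data, and the hand-written gradient descent loop (standing in for a TensorFlow optimizer) are all assumptions made for illustration. It trains one weight with a rate-mode ReLU neuron, then reuses that same weight with an integrate-and-fire neuron and compares the two firing rates.

```python
def rate_neuron(current):
    """Rate-mode ReLU neuron: returns a firing rate (Hz) directly."""
    return max(0.0, current)


def spiking_neuron_rate(current, dt=0.001, t_end=1.0):
    """Integrate-and-fire counterpart of rate_neuron.

    Integrates the input current over time, emits a spike whenever the
    voltage reaches 1, and returns the measured spikes per second.
    """
    voltage = 0.0
    spikes = 0
    for _ in range(int(round(t_end / dt))):
        voltage += current * dt
        if voltage >= 1.0:
            spikes += 1
            voltage -= 1.0
        voltage = max(voltage, 0.0)  # negative input cannot push voltage below 0
    return spikes / t_end


# Steps 1 and 2: train the weight using the rate neuron.
# Toy data (hypothetical): the target rate is always 2 * x.
samples = [(10.0, 20.0), (20.0, 40.0), (30.0, 60.0)]
weight = 0.5
learning_rate = 0.001
for _ in range(200):
    for x, target in samples:
        output = rate_neuron(weight * x)
        # Gradient of 0.5 * (output - target)**2 with respect to the weight;
        # the ReLU slope is 1 while the neuron is active, 0 otherwise.
        grad = (output - target) * x if output > 0 else 0.0
        weight -= learning_rate * grad

# Step 3: keep the trained weight, swap the rate neuron for a spiking one.
test_input = 25.0
trained_rate = rate_neuron(weight * test_input)
spike_rate = spiking_neuron_rate(weight * test_input)
print(weight, trained_rate, spike_rate)
```

The optimizer only ever sees the differentiable rate neuron. The spiking neuron is used afterwards with the same weight, and its measured spike rate should land within about one spike per second of the rate-mode output.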

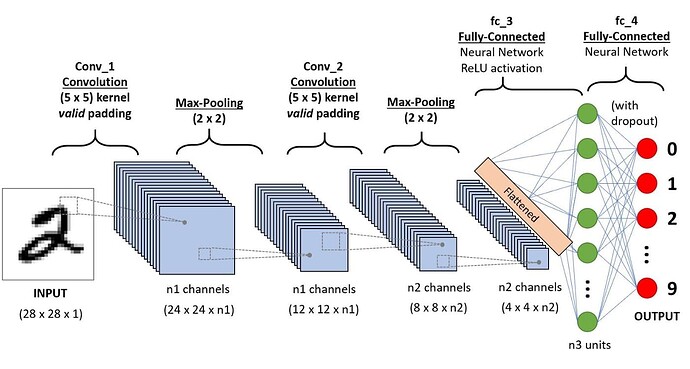

No… The network has convolution layers because, as the name implies, it is a convolutional neural network (CNN). The term “CNN” merely describes the network architecture, and does not place any restrictions on what type of neuron (spiking or not) the network uses. Such networks contain convolution layers to perform computations on the inputs to each layer. Since a convolution is a linear transformation, it can be represented within the connection weights between layers. This means that convolution transforms can be used in spiking neural networks without much change.
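As a rough illustration of the "convolution is just connection weights" point, the sketch below builds the dense weight matrix that is equivalent to a small 2D convolution (no padding, stride 1, and no kernel flip, which is the usual deep learning convention). The helper name `conv_weight_matrix` and the example values are made up for this post; this is not how Nengo stores a convolution internally.

```python
def conv_weight_matrix(kernel, in_h, in_w):
    """Dense weight matrix equivalent to a 'valid', stride-1 2D convolution.

    Each row holds the connection weights from every input pixel to one
    output unit; pixels outside that unit's window get weight 0.
    """
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = in_h - k_h + 1, in_w - k_w + 1
    weights = [[0.0] * (in_h * in_w) for _ in range(out_h * out_w)]
    for oy in range(out_h):
        for ox in range(out_w):
            row = oy * out_w + ox  # index of the output unit
            for ky in range(k_h):
                for kx in range(k_w):
                    col = (oy + ky) * in_w + (ox + kx)  # index of the input pixel
                    weights[row][col] = float(kernel[ky][kx])
    return weights


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 2],
          [3, 4]]

weights = conv_weight_matrix(kernel, in_h=3, in_w=3)
flat_image = [pixel for image_row in image for pixel in image_row]

# An ordinary matrix-vector product now performs the whole convolution.
output = [sum(w * x for w, x in zip(weight_row, flat_image))
          for weight_row in weights]
print(output)  # [37.0, 47.0, 67.0, 77.0]
```

Because the result is an ordinary weight matrix, nothing about it depends on whether the neurons on either side of the connection are rate-based or spiking.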

Thanks for your answer.

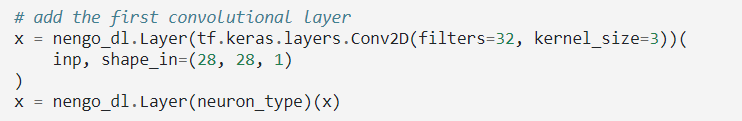

- I don’t quite understand the Conv layer. For example, a Conv layer in a CNN is represented like this:

Can you give me an example of how a Conv layer is represented, with an image or code? Thank you.

This isn’t strictly a Nengo question, so I’ll only briefly discuss it here. If you want more information on how CNNs work, and how the convolution itself is performed, you can find much more informative guides through Google (or your favorite search engine). As an example, here’s a video explaining the basics of how a CNN works.

So, each convolution “layer” simply performs the convolution operation on an input image, or a 2D matrix. The convolution operation itself is a matrix operation, as described in this video. The process of doing the multiplications (between each cell of the kernel and the corresponding cell of the image) and the subsequent summation amounts to a matrix multiplication: a dot product between the flattened kernel and the flattened image patch. The convolution as a whole is a repeated (tiled) set of these multiplications targeting different areas of the input 2D image.

The code for the convolution transform is located here in the Nengo codebase. Nengo uses the ConvInc operator to compute convolutions, which in turn calls the conv2d function, which does the actual convolution matrix operation.
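Since you asked for code, here is a small plain-Python sketch of that tiled multiply-and-sum, assuming no padding and a stride of 1. The function name `conv2d_valid` and the example image are invented for illustration. This is not Nengo's implementation, which is vectorised and also handles channels, strides and padding.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image; each output cell is one dot product."""
    in_h, in_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    output = []
    for oy in range(in_h - k_h + 1):
        output_row = []
        for ox in range(in_w - k_w + 1):
            # Multiply each kernel cell by the image cell underneath it,
            # then sum the products for this window position.
            total = 0
            for ky in range(k_h):
                for kx in range(k_w):
                    total += kernel[ky][kx] * image[oy + ky][ox + kx]
            output_row.append(total)
        output.append(output_row)
    return output


# A 4x5 image with a vertical edge between the third and fourth columns.
image = [[0, 0, 0, 1, 1] for _ in range(4)]

# A 3x3 kernel that responds to left-to-right increases in brightness.
kernel = [[-1, 0, 1] for _ in range(3)]

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[0, 3, 3], [0, 3, 3]]
```

The output is zero where the window sees only flat background and non-zero where the window overlaps the edge, which is the kind of feature map a convolution layer passes on to the next layer.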