Hi,

I am using L2 regularization to push the firing rates toward a target firing rate, following the instructions in the nengo regularization link. However, I do not want to use the Nengo converter, because it is not compatible with some of my research work. I wrote the following code to train the SNN with firing-rate regularization, and then checked the maximum firing rate as described in a Nengo tutorial. Unfortunately, the maximum firing rate in my code is always 1000, and it never falls below that, in spite of the regularization and a target rate of 250. Any suggestions would be much appreciated.
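The following is a minimal numpy sketch, not part of my original code, of the steady-state rate equation used by Nengo's LIF rate neurons. It assumes Nengo's default `tau_rc=0.02` and `tau_ref=0.002`, and the helper `lif_rate` is hypothetical. With `nengo.LIFRate(amplitude=0.01)`, a probe on the neurons records `amplitude * rate`, so the MSE target is compared against that scaled value. A 250 Hz target therefore corresponds to 2.5 in probe units, while a raw target of 250 corresponds to 25000 Hz. The sketch also shows that a rate-mode LIF neuron with these defaults saturates just below `1 / tau_ref` = 500 Hz.

```python
import numpy as np


def lif_rate(J, amplitude=1.0, tau_rc=0.02, tau_ref=0.002):
    """Steady-state LIF output for input current J, scaled by amplitude.

    With amplitude=1.0 the result is the firing rate in Hz. The defaults for
    tau_rc and tau_ref are assumed to match Nengo's LIF defaults.
    """
    J = np.asarray(J, dtype=float)
    out = np.zeros_like(J)
    active = J > 1  # the neuron only fires above the threshold current of 1
    out[active] = amplitude / (tau_ref + tau_rc * np.log1p(1.0 / (J[active] - 1)))
    return out


amplitude = 0.01
target_hz = 250

# A 250 Hz target expressed in the units the probe records (amplitude * rate)
target_in_probe_units = target_hz * amplitude
# The firing rate implied by using 250 directly as the probe target
implied_hz = 250 / amplitude

currents = [0.5, 2.0, 1e9]
print(lif_rate(currents))                       # rates in Hz; 0 below threshold
print(lif_rate(currents, amplitude=amplitude))  # what the probe would record
print(target_in_probe_units, implied_hz)
```

Under these assumptions, increasing the input current only moves the rate closer to 500 Hz. The largest value such a probe can record is about 0.01 * 500 = 5, which is well below a target of 250.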

```python
import matplotlib.pyplot as plt
import nengo
import nengo_dl
import numpy as np
import tensorflow as tf

n_steps = 10
target_rate = 250
max_rate = 46
# amp = 1.0/max_rate
# target_rate = max_rate*amp

(train_images, train_labels), (
    test_images,
    test_labels,
) = tf.keras.datasets.mnist.load_data()

# flatten the 28x28 images into 784-dimensional vectors
train_images = train_images.reshape((train_images.shape[0], -1))
test_images = test_images.reshape((test_images.shape[0], -1))

with nengo.Network(seed=0) as net:
    net.config[nengo.Ensemble].max_rates = nengo.dists.Choice([max_rate])
    net.config[nengo.Ensemble].intercepts = nengo.dists.Choice([0])
    net.config[nengo.Connection].synapse = None
    # rate-mode LIF neurons; their output (and any probe on it) is amplitude * rate in Hz
    neuron_type = nengo.LIFRate(amplitude=0.01)

    nengo_dl.configure_settings(stateful=False)

    inp = nengo.Node(np.zeros(28 * 28))

    x1 = nengo_dl.Layer(tf.keras.layers.Conv2D(filters=32, kernel_size=3))(
        inp, shape_in=(28, 28, 1)
    )
    x1 = nengo_dl.Layer(neuron_type)(x1)
    conv1_p = nengo.Probe(x1, label="conv1")

    x2 = nengo_dl.Layer(tf.keras.layers.Conv2D(filters=64, strides=2, kernel_size=3))(
        x1, shape_in=(26, 26, 32)
    )
    x2 = nengo_dl.Layer(neuron_type)(x2)
    conv2_p = nengo.Probe(x2, label="conv2")

    x3 = nengo_dl.Layer(tf.keras.layers.Conv2D(filters=128, strides=2, kernel_size=3))(
        x2, shape_in=(12, 12, 64)
    )
    x3 = nengo_dl.Layer(neuron_type)(x3)
    conv3_p = nengo.Probe(x3, label="conv3")

    out = nengo_dl.Layer(tf.keras.layers.Dense(units=10))(x3)
    out_p = nengo.Probe(out, label="out_p")
    out_p_filt = nengo.Probe(out, synapse=0.01, label="out_p_filt")

minibatch_size = 200
sim = nengo_dl.Simulator(net, minibatch_size=minibatch_size)

# add a time dimension: 1 step for training, n_steps for testing
train_images = train_images[:, None, :]
train_labels = train_labels[:, None, None]
test_images = np.tile(test_images[:, None, :], (1, n_steps, 1))
test_labels = np.tile(test_labels[:, None, None], (1, n_steps, 1))


def classification_accuracy(y_true, y_pred):
    # accuracy on the output of the last time step
    return tf.metrics.sparse_categorical_accuracy(y_true[:, -1], y_pred[:, -1])


sim.compile(
    optimizer=tf.optimizers.RMSprop(0.001),
    loss={
        out_p: tf.losses.SparseCategoricalCrossentropy(from_logits=True),
        # firing-rate regularization: MSE between probe output and the target
        conv1_p: tf.losses.mse,
        conv2_p: tf.losses.mse,
        conv3_p: tf.losses.mse,
    },
    loss_weights={out_p: 1, conv1_p: 1e-3, conv2_p: 1e-3, conv3_p: 1e-3},
)

sim.fit(
    train_images,
    {
        out_p: train_labels,
        conv1_p: np.ones((train_labels.shape[0], 1, conv1_p.size_in)) * target_rate,
        conv2_p: np.ones((train_labels.shape[0], 1, conv2_p.size_in)) * target_rate,
        conv3_p: np.ones((train_labels.shape[0], 1, conv3_p.size_in)) * target_rate,
    },
    epochs=10,
)

# sim.save_params("./params/mnist_params1_Rt50_St50")

sim.compile(loss={out_p_filt: classification_accuracy})
print(
    "Accuracy after training:",
    sim.evaluate(test_images, {out_p_filt: test_labels}, verbose=0)["loss"],
)
```

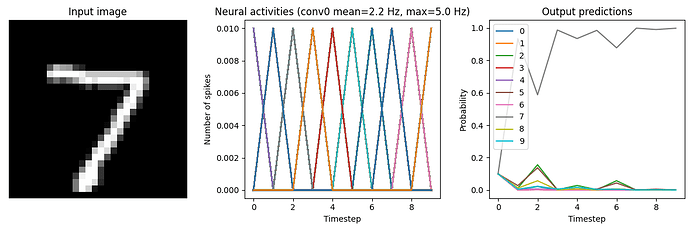

```python
data = sim.predict(test_images[:minibatch_size])
```
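Here is a hedged sketch of turning probe output such as `data[conv1_p]` into firing rates in Hz. `sim.predict` returns this output with shape `(batch, n_steps, n_neurons)`. The approach is to divide by the neuron `amplitude` and average over the time axis. The function name `firing_rates` is hypothetical, and the spike train below is made up for illustration. It assumes spiking `nengo.LIF` output, where each spike appears as `amplitude / dt` on a single time step. It shows why the maximum over single steps equals `1 / dt` = 1000 Hz whenever a neuron spikes at least once, even if the neuron spikes only once in 10 steps.

```python
import numpy as np


def firing_rates(probe_data, amplitude):
    """Mean firing rate (Hz) per example and neuron.

    probe_data has shape (batch, n_steps, n_neurons), as returned by
    sim.predict for a neuron probe; values are amplitude-scaled.
    """
    return np.mean(probe_data, axis=1) / amplitude


amplitude = 0.01
dt = 0.001  # Nengo's default simulation time step (s)
n_steps = 10

# Hypothetical spiking output for 1 example and 2 neurons:
# neuron 0 spikes once in 10 steps, neuron 1 never spikes.
spikes = np.zeros((1, n_steps, 2))
spikes[0, 3, 0] = amplitude / dt  # a nengo.LIF spike is amplitude / dt on one step

rates = firing_rates(spikes, amplitude)
per_step_max = np.max(spikes) / amplitude

print(rates)         # approximately 100 Hz for neuron 0 and 0 Hz for neuron 1
print(per_step_max)  # approximately 1000 Hz, i.e. 1 / dt
```

The same time average also works for rate neurons such as `nengo.LIFRate`, because their output is already `amplitude * rate` on every step.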

Regards,

Ayesha Siddique