This paper (http://compneuro.uwaterloo.ca/files/publications/stewart.2012d.pdf) gives a quick introduction to some of these concepts, which might be helpful. I’ll give some brief answers here, but I’d definitely recommend reading the paper, as it explains things in more detail.

- What is an encoder in terms of neural networks?

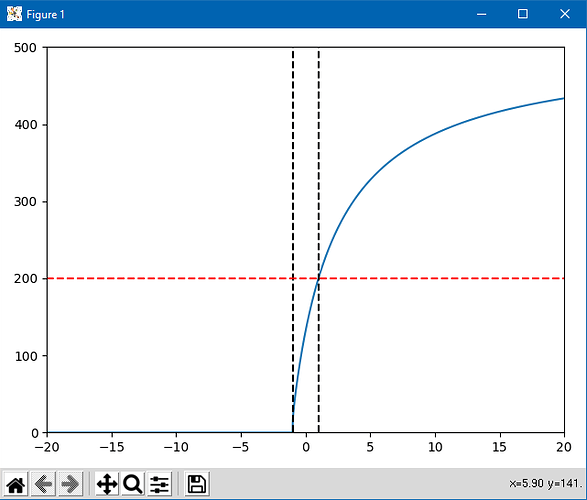

You can think of a neuron’s encoder as representing its preferred stimulus (a notion related to the “tuning curve” in neuroscience), i.e., the input stimulus that will cause that neuron to respond most strongly. In practical terms at the neural network level, you can just think of the encoders as part of the connection weights on a given connection.
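As a rough illustration of the "preferred stimulus" idea, here is a minimal sketch in plain Python (not Nengo code). It assumes a rectified linear neuron whose input current is `gain * dot(encoder, x) + bias`; the function name `response` and the specific numbers are made up for the example.

```python
def response(encoder, x, gain=100.0, bias=20.0):
    """Firing rate of a hypothetical rectified linear neuron."""
    # The encoder enters only through this dot product with the input.
    similarity = sum(e * xi for e, xi in zip(encoder, x))
    current = gain * similarity + bias
    return max(current, 0.0)

encoder = [1.0, 0.0]  # this neuron "prefers" inputs along the first axis

aligned = response(encoder, [1.0, 0.0])      # input matches the encoder
orthogonal = response(encoder, [0.0, 1.0])   # input at 90 degrees to the encoder
opposite = response(encoder, [-1.0, 0.0])    # input points the other way
print(aligned, orthogonal, opposite)
```

The neuron responds most strongly when the input lines up with its encoder, and less (or not at all) as the input rotates away from it.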

- What do the parameters ‘intercepts’ and ‘max rates’ actually do?

These control the gain and bias on the neurons. The intercept is the input value at which that neuron will begin to respond, and the max rate is the neuron’s firing rate when the input value is equal to the max value (as defined by the radius parameter, discussed next). Given a neuron model (e.g. LIF or rectified linear) and a desired intercept and max rate, you can calculate the corresponding gain and bias for the neuron. It is the gain and bias that are actually used when the network is simulated.
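To make the intercept/max rate to gain/bias conversion concrete, here is a sketch of the calculation for a rectified linear neuron and for a LIF neuron. It assumes the input is normalized so that the max value is 1, that the LIF neuron starts firing at an input current of 1, and the usual LIF time constants (`tau_rc=0.02`, `tau_ref=0.002`). The function names are my own; this is meant to show the idea rather than to reproduce Nengo's code exactly.

```python
import math

def relu_gain_bias(intercept, max_rate):
    """Gain and bias for a rectified linear neuron: rate = max(gain*x + bias, 0)."""
    gain = max_rate / (1.0 - intercept)
    bias = -intercept * gain
    return gain, bias

def lif_gain_bias(intercept, max_rate, tau_rc=0.02, tau_ref=0.002):
    """Gain and bias for a LIF neuron; requires max_rate < 1 / tau_ref."""
    # Input current at which the LIF rate equation gives max_rate
    current_at_max = 1.0 / (1.0 - math.exp((tau_ref - 1.0 / max_rate) / tau_rc))
    gain = (current_at_max - 1.0) / (1.0 - intercept)
    bias = 1.0 - gain * intercept
    return gain, bias

def lif_rate(x, gain, bias, tau_rc=0.02, tau_ref=0.002):
    """Steady-state LIF firing rate for a scalar input x."""
    current = gain * x + bias
    if current <= 1.0:
        return 0.0  # below threshold: the neuron is silent
    return 1.0 / (tau_ref + tau_rc * math.log1p(1.0 / (current - 1.0)))

gain, bias = lif_gain_bias(intercept=-0.5, max_rate=200.0)
rate_below = lif_rate(-0.6, gain, bias)   # just below the intercept
rate_at_max = lif_rate(1.0, gain, bias)   # at the max input value
print(rate_below, rate_at_max)
```

The neuron is silent below its intercept and fires at (approximately) the requested max rate when the input reaches 1.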

- What is the ‘radius’ of a neural ensemble?

Mathematically, the radius is used in the intercept/max rate calculations, as described above. Practically, the radius defines the expected magnitude of the inputs to that ensemble. E.g., if I expect the inputs to an ensemble to be in the range (-1, 1), then I would set the radius to 1. If I expect the inputs to be in the range (-2, 2), I would set the radius to 2. This isn’t a hard limit; you can still simulate the network with inputs outside the expected range, but the performance will probably not be as good for those inputs.
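One way to picture the radius is as a rescaling of the input before the gain and bias are applied. The sketch below assumes a rectified linear neuron with an encoder of +1 and made-up intercept and max rate values; the function `rate` is hypothetical, not part of Nengo.

```python
def rate(x, radius, intercept=0.0, max_rate=100.0):
    """Rectified linear rate for a scalar input x (neuron with encoder +1)."""
    gain = max_rate / (1.0 - intercept)
    bias = -intercept * gain
    # Dividing by the radius makes x == radius behave like a normalized input of 1.
    current = gain * (x / radius) + bias
    return max(current, 0.0)

at_edge_r1 = rate(1.0, radius=1.0)   # edge of the expected range for radius 1
at_edge_r2 = rate(2.0, radius=2.0)   # edge of the expected range for radius 2
inside_r2 = rate(1.0, radius=2.0)    # halfway into the range for radius 2
print(at_edge_r1, at_edge_r2, inside_r2)
```

With radius 2, the neuron only reaches its max rate at an input of 2, so the tuning is spread over the larger expected input range.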

- Does connecting objects in Nengo simulate the behavior of a synapse? If so, what is the point of the ‘synapse=’ parameter, and what does the number afterwards do?

Yes, connecting objects includes a simulation of synaptic behaviour. The default synapse is a Lowpass filter, and the number is the time constant of that filter (in seconds). Alternatively, you could use any of the other synapse models in nengo.synapses.
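To get a feel for what the time constant does, here is a minimal plain-Python sketch of a first-order lowpass filter applied to a step input. It assumes a 1 ms timestep and a simple exponential-decay discretization; the details of Nengo's own implementation may differ, and the function `lowpass` is just for illustration.

```python
import math

def lowpass(signal, tau, dt=0.001):
    """Filter a list of samples with a first-order lowpass (tau in seconds)."""
    decay = math.exp(-dt / tau)  # fraction of the old output kept on each step
    y = 0.0
    out = []
    for x in signal:
        y = decay * y + (1.0 - decay) * x
        out.append(y)
    return out

step = [1.0] * 50                # input jumps to 1 and stays there for 50 ms
fast = lowpass(step, tau=0.005)  # short time constant: output follows quickly
slow = lowpass(step, tau=0.02)   # long time constant: output lags behind
print(fast[4], slow[4])          # filtered values 5 ms after the step
```

A larger time constant gives a smoother but slower response; after one time constant the output has covered about 63% of the distance to the new input value.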

- Why are we allowed to perform functions over a connection? What concept in neural networks is this related to? My understanding was the synapse just connected neurons and held the weight.

The function you specify on a connection is defining what function you would like that connection to perform. Nengo will then automatically solve for a set of connection weights that best approximate that function. So in the end the actual network just contains connection weights and synapses, as you expect.
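Here is a rough NumPy sketch of what "solving for weights that approximate a function" can look like. It assumes rectified linear rate neurons with randomly chosen encoders, intercepts, and max rates, and uses a regularized least-squares solve; all names and parameter values are illustrative, not Nengo's internals.

```python
import numpy as np

rng = np.random.default_rng(0)
n_neurons = 50

# Hypothetical 1-D population: random encoders (+1/-1), intercepts, max rates
encoders = rng.choice([-1.0, 1.0], size=n_neurons)
intercepts = rng.uniform(-0.9, 0.9, size=n_neurons)
max_rates = rng.uniform(100, 200, size=n_neurons)
gain = max_rates / (1 - intercepts)
bias = -intercepts * gain

def rates(x):
    """Rectified linear firing rates, shape (n_points, n_neurons)."""
    current = gain * np.outer(x, encoders) + bias
    return np.maximum(current, 0.0)

x = np.linspace(-1, 1, 200)   # sample inputs across the expected range
A = rates(x)                  # neural activity at each sample input
target = x ** 2               # the function we want the connection to compute

# Regularized least squares: find decoders d such that A @ d is close to target
sigma = 0.1 * A.max()
G = A.T @ A + len(x) * sigma ** 2 * np.eye(n_neurons)
decoders = np.linalg.solve(G, A.T @ target)

estimate = A @ decoders
rmse = float(np.sqrt(np.mean((estimate - target) ** 2)))
print(rmse)
```

The `decoders` are just a weight vector: a weighted sum of the neurons' activities gives an approximation of `x ** 2`, and that weighted sum is all the final network contains.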

- What does the transform parameter do to the connection?

This just applies a linear transformation to the output of the function we talked about above. Or, in the case of non-decoded connections (e.g., directly connecting to ensemble.neurons objects), it defines the connection weight matrix. In either case, you again just end up with a set of connection weights connecting the two ensembles; the transform is a mathematical abstraction.

- What is a “dimension” of an ensemble? I understand the fact that it allows you to store an additional real number in the ensemble and that the real number must be stored over many neurons for precision and to average out the noise, but is there a deeper reason?

I think this is best explained in the paper above; hopefully it will make more sense after reading that.