Righto. I can see your confusion; the derivation is a little more nuanced than just the equations for $J$ and $a(x)$. Btw, our forums support $\LaTeX$. Simply enclose a $\LaTeX$ formula within `$` signs, like so:

`$\LaTeX$` renders as $\LaTeX$

Back to the explanation!

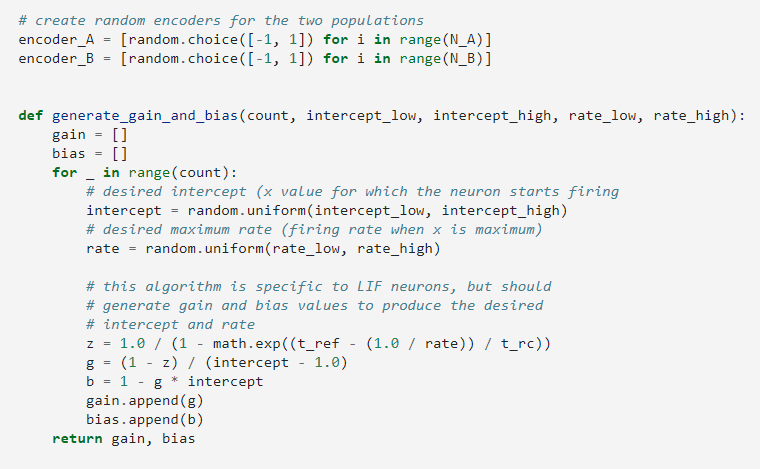

So, as I mentioned before, there are two equations that map the neuron gain ($\alpha$) and neuron bias current ($J_{bias}$) to the firing rate ($a$) of an LIF neuron. We have:

$$J(x) = \alpha x + J_{bias} \qquad \text{(Eq. 1)}$$

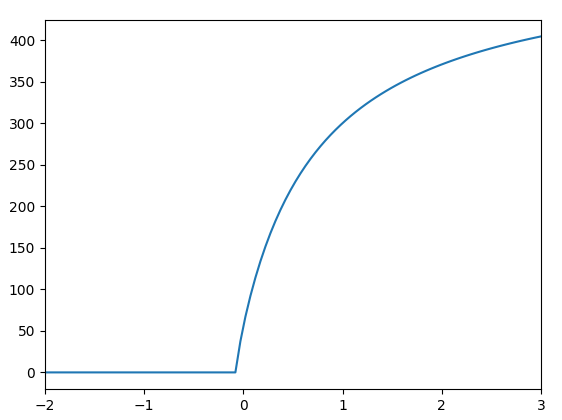

which converts an input value $x$ to the neuron input current $J(x)$. We also have the LIF activation function:

$$a(x) = \frac{1}{\tau^{ref} - \tau^{RC}\ln\left(1 - \frac{J_{th}}{J(x)}\right)}$$
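To make these two equations concrete, here is a minimal Python sketch of Eq. 1 and the LIF activation function. The function names and the time constants ($\tau^{RC} = 0.02$ s, $\tau^{ref} = 0.002$ s, which I believe match Nengo's LIF defaults) are my own assumptions for illustration, not Nengo's API.

```python
import math

# Assumed LIF parameters for illustration (seconds); J_TH = 1 as in Nengo.
TAU_RC = 0.02
TAU_REF = 0.002
J_TH = 1.0


def input_current(x, alpha, j_bias):
    """Eq. 1: convert an input value x into the neuron input current J(x)."""
    return alpha * x + j_bias


def lif_rate(j):
    """LIF activation: steady-state firing rate (Hz) for a constant current j."""
    if j <= J_TH:
        return 0.0  # at or below threshold, the neuron never spikes
    return 1.0 / (TAU_REF - TAU_RC * math.log(1.0 - J_TH / j))


# Hypothetical gain and bias, just to exercise the two equations (J = 1.5 here).
rate = lif_rate(input_current(0.5, alpha=2.0, j_bias=0.5))
print(f"{rate:.1f} Hz")
```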

We can rearrange the activation function to solve for $J(x)$ like so:

$$J(x) = \frac{J_{th}}{1 - e^{\left(\frac{\tau^{ref} - \frac{1}{a(x)}}{\tau^{RC}}\right)}}$$

If you look at the code, this is what z is computing. Before continuing, I would like to point out that the following statement is slightly incorrect:

Rather, z computes the input current for the neuron given any value of the rate $a(x)$ (it doesn't have to be the maximum rate).
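Here is a sketch of that rearranged equation (the quantity z), assuming $\tau^{RC} = 0.02$ s, $\tau^{ref} = 0.002$ s, and $J_{th} = 1$. The name `current_for_rate` is hypothetical. The round trip at the end shows that it works for any rate strictly between 0 and $1/\tau^{ref}$, not just the maximum one.

```python
import math

TAU_RC = 0.02    # assumed membrane time constant (s)
TAU_REF = 0.002  # assumed refractory period (s)
J_TH = 1.0


def current_for_rate(rate):
    """Rearranged LIF activation: the current J that produces `rate` (Hz)."""
    if not 0.0 < rate < 1.0 / TAU_REF:
        raise ValueError("rate must lie strictly between 0 and 1/TAU_REF")
    return J_TH / (1.0 - math.exp((TAU_REF - 1.0 / rate) / TAU_RC))


def lif_rate(j):
    """LIF activation, used here only to check the inverse."""
    if j <= J_TH:
        return 0.0
    return 1.0 / (TAU_REF - TAU_RC * math.log(1.0 - J_TH / j))


# rate -> current -> rate recovers the original rate for any valid rate.
for r in (10.0, 100.0, 300.0):
    j = current_for_rate(r)
    print(f"{r:6.1f} Hz needs J = {j:.4f}; lif_rate(J) = {lif_rate(j):.4f} Hz")
```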

These two equations for $J(x)$ alone are not enough to solve for $\alpha$ and $J_{bias}$. So… how do we actually do this? Just as solving for $m$ (slope) and $c$ (constant) in the equation of a straight line requires two known points, we need two known fixed points!

The First Point

The first known point is the intercept. When $x = x_{intcpt}$, the firing rate of the neuron is, by definition, 0. This alone doesn't help much, because substituting $a(x_{intcpt}) = 0$ into the rearranged equation for $J(x)$ makes it blow up (the $\frac{1}{a(x)}$ term is a division by 0). However, also by definition, when the neuron is at its firing threshold, its input current $J(x)$ must equal its threshold current $J_{th}$. Using this fact and Eq. 1, we can write:

$$J_{th} = \alpha x_{intcpt} + J_{bias}$$

In Nengo, we set $J_{th} = 1$ (it's arbitrary, and a simple value to work with), so:

$$1 = \alpha x_{intcpt} + J_{bias} \qquad \text{(Eq. 2)}$$
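A quick numerical check of why the threshold condition is the right one, as a sketch with assumed time constants ($\tau^{RC} = 0.02$ s, $\tau^{ref} = 0.002$ s): although you can't plug $a = 0$ into the rearranged equation for $J(x)$, as the rate shrinks toward 0 the exponential term vanishes and the required current approaches $J_{th} = 1$, which agrees with Eq. 2. The helper name is hypothetical.

```python
import math

TAU_RC = 0.02    # assumed membrane time constant (s)
TAU_REF = 0.002  # assumed refractory period (s)
J_TH = 1.0


def current_for_rate(rate):
    """Rearranged LIF activation (valid for 0 < rate < 1/TAU_REF)."""
    return J_TH / (1.0 - math.exp((TAU_REF - 1.0 / rate) / TAU_RC))


# As rate -> 0, 1/rate grows without bound, exp(...) -> 0, and J -> J_TH.
for r in (20.0, 5.0, 1.0, 0.01):
    print(f"rate = {r:5.2f} Hz -> J = {current_for_rate(r):.6f}")
```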

The Second Point

The second fixed point is the “maximum” firing rate of the neuron. I should clarify that there are two “maximum” rates for neurons in the NEF. The “true” maximum is how fast the neuron fires given an infinite input current. This is $1/\tau^{ref}$ (i.e., the neuron produces a spike the instant its refractory period ends). This value isn't helpful in this derivation, so that's all I'll say about it for now.
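To see the “true” maximum numerically, here is a sketch (assuming $\tau^{RC} = 0.02$ s and $\tau^{ref} = 0.002$ s, so $1/\tau^{ref} = 500$ Hz) that evaluates the LIF activation for ever-larger currents. Since $\ln(1 - J_{th}/J) \to 0$, the rate creeps up toward, but never reaches, 500 Hz.

```python
import math

TAU_RC = 0.02    # assumed membrane time constant (s)
TAU_REF = 0.002  # assumed refractory period (s)
J_TH = 1.0


def lif_rate(j):
    """LIF activation: steady-state firing rate (Hz) for a constant current j."""
    if j <= J_TH:
        return 0.0
    return 1.0 / (TAU_REF - TAU_RC * math.log(1.0 - J_TH / j))


print(f"1/tau_ref = {1.0 / TAU_REF:.1f} Hz")
for j in (10.0, 1e3, 1e6):
    print(f"J = {j:>9.0f} -> {lif_rate(j):.3f} Hz")
```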

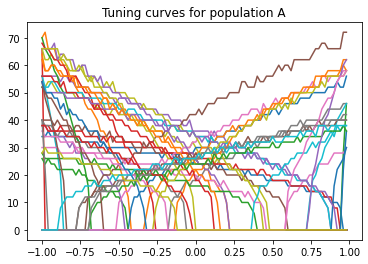

The other “maximum” is how fast we want the neuron to fire at some maximal value of $x$. In the NEF, input values $x$ are assumed to lie within $[-1, 1]$, so the “maximum” firing rate of a neuron is defined as its rate when the input is $x = 1$. Evaluating Eq. 1 at $x = 1$ gives the following, where $J(1)$ is obtained by plugging this maximum rate into the rearranged activation function (i.e., it is the value z from the code):

$$J(1) = \alpha + J_{bias} \qquad \text{(Eq. 3)}$$
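As a worked example (the numbers are my own, chosen for illustration): with $\tau^{RC} = 0.02$ s, $\tau^{ref} = 0.002$ s, and a desired maximum rate of $a(1) = 200$ Hz, the rearranged activation function gives

$$
\begin{aligned}
J(1) &= \frac{1}{1 - e^{\left(\frac{0.002 - 1/200}{0.02}\right)}} = \frac{1}{1 - e^{-0.15}} \\
&\approx \frac{1}{1 - 0.8607} \approx 7.179
\end{aligned}
$$

so for this neuron, Eq. 3 says $\alpha + J_{bias} \approx 7.179$.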

Solving for \alpha and J_{bias}

With Eq. 2 and Eq. 3, we can now solve for $\alpha$ and $J_{bias}$ with a bit of algebra. First, we rearrange Eq. 3 to solve for $J_{bias}$:

$$J_{bias} = J(1) - \alpha \qquad \text{(Eq. 4)}$$

Then we substitute this into Eq. 2 and solve for $\alpha$:

$$1 = \alpha x_{intcpt} + J(1) - \alpha$$

$$1 - J(1) = \alpha(x_{intcpt} - 1)$$

$$\alpha = \frac{1 - J(1)}{x_{intcpt} - 1}$$

If you look at the code, this is what g is computing.
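To tie this back to the straight-line analogy: $\alpha$ is just the slope of the line through the two fixed points $(x_{intcpt}, 1)$ and $(1, J(1))$. A minimal sketch, where the function name `gain_from_points` and the example values (intercept $-0.5$, a 200 Hz maximum rate, $\tau^{RC} = 0.02$ s, $\tau^{ref} = 0.002$ s) are illustrative assumptions:

```python
import math

TAU_RC = 0.02    # assumed membrane time constant (s)
TAU_REF = 0.002  # assumed refractory period (s)


def gain_from_points(intercept, j_at_1):
    """alpha = (1 - J(1)) / (x_intcpt - 1): the slope of Eq. 1 through the
    fixed points (x_intcpt, J_th = 1) and (1, J(1))."""
    return (1.0 - j_at_1) / (intercept - 1.0)


max_rate = 200.0  # Hz, assumed
intercept = -0.5  # assumed
# J(1) from the rearranged activation function (the quantity z).
j_at_1 = 1.0 / (1.0 - math.exp((TAU_REF - 1.0 / max_rate) / TAU_RC))
alpha = gain_from_points(intercept, j_at_1)

# The same value written as "rise over run" between the two points.
slope = (j_at_1 - 1.0) / (1.0 - intercept)
print(f"J(1) = {j_at_1:.3f}, alpha = {alpha:.3f}, slope = {slope:.3f}")
```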

Once we have $\alpha$, we can solve for $J_{bias}$ by substituting it into Eq. 2:

$$1 = \alpha x_{intcpt} + J_{bias}$$

$$J_{bias} = 1 - \alpha x_{intcpt}$$

And if you look at the code, this is what b computes.
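Putting z, g, and b together, here is a minimal sketch of the whole procedure. `lif_gain_bias` is a hypothetical name (this is not Nengo's source), and the time constants are assumed illustrative values. The checks at the end confirm both fixed points: the current at the intercept equals $J_{th} = 1$, and the rate at $x = 1$ equals the requested maximum rate.

```python
import math

TAU_RC = 0.02    # assumed membrane time constant (s)
TAU_REF = 0.002  # assumed refractory period (s)
J_TH = 1.0       # threshold current, fixed at 1 as in Nengo


def lif_rate(j):
    """LIF activation: steady-state firing rate (Hz) for a constant current j."""
    if j <= J_TH:
        return 0.0
    return 1.0 / (TAU_REF - TAU_RC * math.log(1.0 - J_TH / j))


def lif_gain_bias(max_rate, intercept):
    """Solve Eq. 2 and Eq. 3 for (alpha, J_bias) of one LIF neuron."""
    if not 0.0 < max_rate < 1.0 / TAU_REF:
        raise ValueError("max_rate must lie strictly between 0 and 1/TAU_REF")
    if intercept >= 1.0:
        raise ValueError("intercept must be below 1 (the x of the max rate)")
    z = J_TH / (1.0 - math.exp((TAU_REF - 1.0 / max_rate) / TAU_RC))  # J(1)
    g = (J_TH - z) / (intercept - 1.0)  # alpha
    b = J_TH - g * intercept            # J_bias
    return g, b


alpha, j_bias = lif_gain_bias(max_rate=200.0, intercept=-0.5)
print(f"alpha = {alpha:.4f}, J_bias = {j_bias:.4f}")
print("J at intercept:", alpha * -0.5 + j_bias)
print("rate at x = 1:", lif_rate(alpha * 1.0 + j_bias))
```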

Afternotes

I should note that this method for solving for $\alpha$ and $J_{bias}$ applies to non-LIF neuron types as well. All you would have to do is replace the rearranged LIF equation for $J(x)$ with the corresponding equation for the other neuron type (and use that neuron's threshold current if it isn't 1).
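To illustrate that generality, here is a sketch of a neuron-agnostic version that takes the inverse activation function (rate to current) and the threshold current as arguments. Everything here is hypothetical and for illustration only: the function names, the assumed LIF time constants, and the rectified linear neuron, which I'm assuming fires at rate $a = J$ for $J > 0$ (so its threshold current is 0 rather than 1).

```python
import math


def gain_bias(max_rate, intercept, current_for_rate, j_th):
    """Solve J_th = alpha*x_intcpt + J_bias and J(1) = alpha + J_bias.

    current_for_rate is the neuron's inverse activation (rate -> current).
    """
    j_at_1 = current_for_rate(max_rate)
    alpha = (j_th - j_at_1) / (intercept - 1.0)
    j_bias = j_th - alpha * intercept
    return alpha, j_bias


def lif_current_for_rate(rate, tau_rc=0.02, tau_ref=0.002):
    """Inverse LIF activation with J_th = 1 (assumed time constants)."""
    return 1.0 / (1.0 - math.exp((tau_ref - 1.0 / rate) / tau_rc))


def relu_current_for_rate(rate):
    """Assumed rectified linear neuron: rate = J above J_th = 0, so J = rate."""
    return rate


print("LIF: ", gain_bias(200.0, -0.5, lif_current_for_rate, j_th=1.0))
print("ReLU:", gain_bias(200.0, -0.5, relu_current_for_rate, j_th=0.0))
```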