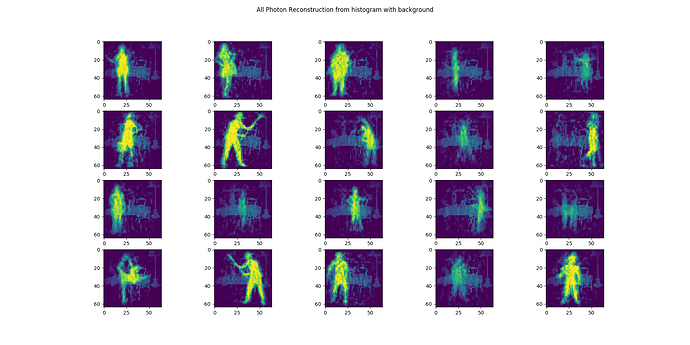

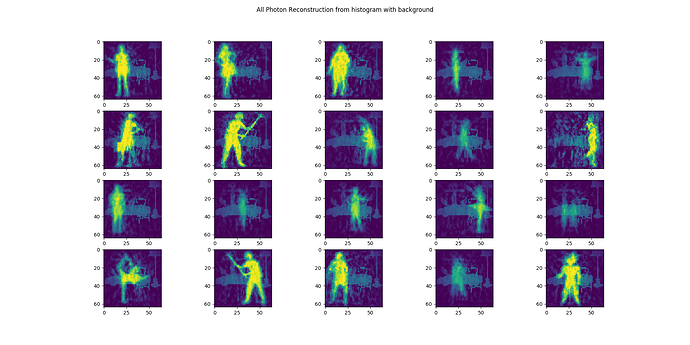

Hey, just a quick question: I seem to get different results from the Ensemble and NengoDL models, which I think are equivalent. I'm not 100% sure they are identical; I followed the advice in a previous forum post ("Need to download weights of fully connected network"). The output images are above and the code is below.

I was also looking at how to improve my model (I plan to move to a CNN-based model later, so I mean Nengo features I haven't used in the models shown) and/or switch from SReLU (SpikingRectifiedLinear) to LIF neurons, but LIF performance was awful in comparison. I looked at the studywolf blog, but since it uses the converter, I'm unsure how to replicate the "changing the gains" step within the networks I have built.
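On the gains question, here is a minimal sketch of what the converter's firing-rate scaling (`scale_firing_rates` in `nengo_dl.Converter`) appears to do, written in plain NumPy so it doesn't depend on Nengo. It assumes the update rule of `nengo.SpikingRectifiedLinear` (integrate J·dt, emit one spike per whole unit of voltage, each spike worth `amplitude / dt`) and that, with `intercepts = 0`, the gain equals `max_rates`. The function name, the input value `x` and the 10-step window are hypothetical. With `max_rates = 1000` and `amplitude = 0.001`, `amplitude * gain = 1`, so the mean output should match the input. Scaling the rate up and the amplitude down by the same factor keeps that mean but shrinks the spike-count quantization error over a short window.

```python
import numpy as np


def spiking_relu_mean_output(x, gain, amplitude, n_steps, dt=0.001):
    """Mean output of one spiking ReLU neuron driven by a constant input x.

    Mirrors the nengo.SpikingRectifiedLinear update: the voltage integrates
    J * dt, each whole unit of voltage emits a spike, and each spike adds
    amplitude / dt to the output. Bias is 0 because intercepts = 0.
    """
    voltage = 0.0
    total = 0.0
    for _ in range(n_steps):
        voltage += max(gain * x, 0.0) * dt
        n_spikes = np.floor(voltage)
        voltage -= n_spikes
        total += (amplitude / dt) * n_spikes
    return total / n_steps


x = 0.3371    # hypothetical pre-activation value
n_steps = 10  # hypothetical 10 ms presentation window at dt = 1 ms

errors = {}
for scale in (1, 10, 100):
    gain = 1000.0 * scale      # i.e. max_rates = 1000 * scale, intercepts = 0
    amplitude = 0.001 / scale  # keeps amplitude * gain == 1
    estimate = spiking_relu_mean_output(x, gain, amplitude, n_steps)
    errors[scale] = abs(estimate - x)
    print(f"scale={scale:>3}  estimate={estimate:.4f}  error={errors[scale]:.4f}")
```

If this reasoning carries over to the network below, the analogous change there would presumably be multiplying the `max_rates` Choice by some factor and dividing `amplitude` by the same factor, at the cost of more spikes per neuron. LIF neurons have a nonlinear, saturating rate curve, so the same rescaling would only be approximate for them.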

Also, to get all my questions in: I'm looking to parse the data and feed it in sequentially. Since it is histogram data, I want the network to retain the previous input and use each new input to get a better result. Should I look at LMUs (Legendre Memory Units) to retain this information?
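For reference, the memory at the core of an LMU is a small linear system, θ·dm/dt = A·m(t) + B·u(t), that compresses roughly the last θ seconds of a scalar input u into `order` Legendre coefficients (Voelker et al., 2019). The minimal NumPy sketch below assumes the A and B definitions from that paper and uses a simple Euler step; reference implementations may use a zero-order-hold discretization instead. `theta`, `order`, `dt` and the function names are illustrative choices, not the API of KerasLMU or NengoDL. Here `u` is a single scalar channel; how the histogram bins would be projected into it is left open.

```python
import numpy as np


def legendre_delay_matrices(order):
    """A and B of the Legendre delay system used as the LMU memory.

    A[i, j] = (2i + 1) * (-1 if i < j else (-1) ** (i - j + 1))
    B[i]    = (2i + 1) * (-1) ** i
    """
    A = np.zeros((order, order))
    B = np.zeros(order)
    for i in range(order):
        B[i] = (2 * i + 1) * (-1) ** i
        for j in range(order):
            A[i, j] = (2 * i + 1) * (-1 if i < j else (-1) ** (i - j + 1))
    return A, B


def run_memory(u, theta=1.0, order=6, dt=0.001):
    """Euler-integrate theta * dm/dt = A m + B u over a 1-D input sequence u.

    Returns the memory state after every step, shape (len(u), order).
    """
    A, B = legendre_delay_matrices(order)
    m = np.zeros(order)
    states = np.zeros((len(u), order))
    for t, u_t in enumerate(u):
        m = m + (dt / theta) * (A @ m + B * u_t)
        states[t] = m
    return states


# Constant input of 1 for 10 s (10 * theta) at dt = 1 ms
states = run_memory(np.ones(10000))
print(np.round(states[-1], 3))
```

With a constant input held for many multiples of θ, the state should settle at `[1, 0, ..., 0]`: the window being remembered is constant, and a constant is represented entirely by the first Legendre coefficient.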

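```python
import nengo
import nengo_dl
import numpy as np
import tensorflow as tf

with nengo.Network(seed=0) as net:
    # Default neuron parameters: with intercepts of 0, each neuron's gain equals max_rates
    net.config[nengo.Ensemble].max_rates = nengo.dists.Choice([1000])
    net.config[nengo.Ensemble].intercepts = nengo.dists.Choice([0])
    # No synaptic filtering on any connection
    net.config[nengo.Connection].synapse = None
    neuron_type = nengo.SpikingRectifiedLinear(amplitude=0.001)

    # Don't carry simulator state over between separate simulator calls
    nengo_dl.configure_settings(stateful=False)

    # 7999-dimensional input
    inp = nengo.Node(np.zeros(7999))

    # Dense (ReLU) layers, each followed by a spiking layer
    hidden = nengo_dl.Layer(
        tf.keras.layers.Dense(units=1024, activation=tf.nn.relu))(inp)
    hidden = nengo_dl.Layer(neuron_type)(hidden)

    hidden = nengo_dl.Layer(
        tf.keras.layers.Dense(units=512, activation=tf.nn.relu))(hidden)
    hidden = nengo_dl.Layer(neuron_type)(hidden)

    hidden = nengo_dl.Layer(
        tf.keras.layers.Dense(units=256, activation=tf.nn.relu))(hidden)
    hidden = nengo_dl.Layer(neuron_type)(hidden)

    # Final hidden layer has no spiking layer after it (non-spiking ReLU)
    hidden = nengo_dl.Layer(
        tf.keras.layers.Dense(units=4096, activation=tf.nn.relu))(hidden)

    # Linear output layer
    out = nengo_dl.Layer(tf.keras.layers.Dense(units=4096))(hidden)

    # Raw output, plus the output smoothed by a 10 ms lowpass synapse
    out_p = nengo.Probe(out, label="out_p")
    out_p_filt = nengo.Probe(out, synapse=0.01, label="out_p_filt")
```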

One set of images is slightly more refined than the other, as if I'm taking an extra step or smoothing the spikes somewhere.
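One possible source of the difference: the two probes in the code differ only in filtering. `out_p` records the output unfiltered (a probe's synapse defaults to `None`), while `out_p_filt` passes it through a 10 ms lowpass synapse, so images built from the filtered probe will look smoother. Below is a minimal NumPy sketch of that effect. It assumes a first-order exponential filter y[t] = a·y[t-1] + (1 - a)·x[t] with a = exp(-dt/τ), which is close to, but may differ by a timestep from, Nengo's own `nengo.Lowpass` discretization. The spike train is hypothetical.

```python
import numpy as np


def lowpass(signal, tau=0.01, dt=0.001):
    """First-order exponential lowpass filter applied along axis 0."""
    decay = np.exp(-dt / tau)
    filtered = np.zeros(signal.shape, dtype=float)
    y = np.zeros(signal.shape[1:])
    for t, x in enumerate(signal):
        y = decay * y + (1 - decay) * x
        filtered[t] = y
    return filtered


# Hypothetical spike train: one neuron firing every 4th step (250 Hz at dt = 1 ms).
# With amplitude 0.001, each spike has height 0.001 / dt = 1, so the mean output is 0.25.
dt = 0.001
n_steps = 2000
spikes = np.zeros(n_steps)
spikes[::4] = 0.001 / dt

filtered = lowpass(spikes, tau=0.01, dt=dt)

# Compare the second half, after the filter has settled
print("raw:      mean %.3f, std %.3f" % (spikes[1000:].mean(), spikes[1000:].std()))
print("filtered: mean %.3f, std %.3f" % (filtered[1000:].mean(), filtered[1000:].std()))
```

Both signals have the same mean, but the filtered one has a much smaller spread, which is the kind of "smoothing" difference that would show up between images built from the two probes.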
