Hi @Adam_Antios, and welcome back to the Nengo forums!

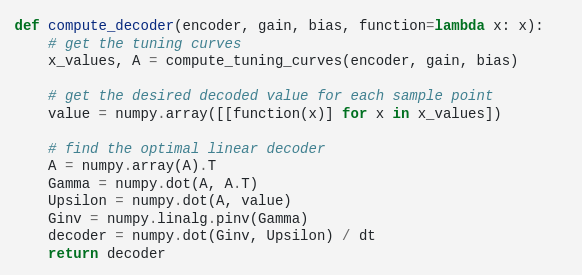

The integration operation $<\cdot{}>_x$ is a real sneaky one, and is actually hiding in plain sight in the code – being computed by the numpy.dot() function. The numpy.dot() function computes a vector inner product, and to see how this computes the integration, I’ll go through the computation in detail below.

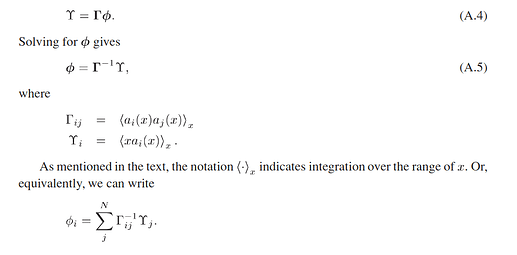

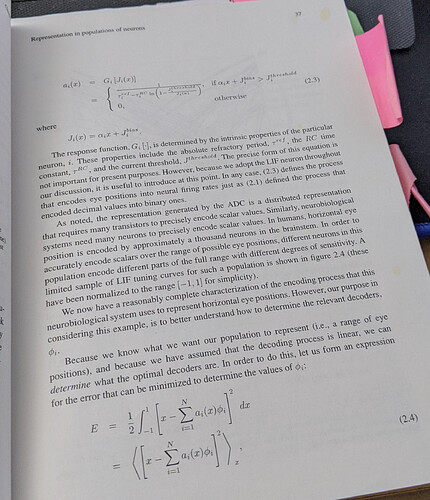

In the Neural Engineering book, $<\cdot{}>_x$ denotes the operation to compute the mean (i.e., integrate) over the range of $x$. Thus, if $x = [x_1, x_2, x_3]$, $< x >_x = (x_1 + x_2 + x_3) / 3$.

I’ll move to the Upsilon calculation next, because it’s easier (fewer things to type out) than the Gamma matrix, but you can do the same thing for the Gamma matrix to prove to yourself that the numpy.dot function is performing the integration. Moving forward, in this example, lets suppose that $x$ consists of two values: $x_1$, and $x_2$. Let us also have just 3 neurons, which gives us 3 activity values: $a_1$, $a_2$, $a_3$. Since we are evaluating $x$ at two points, each of these activity values will be a vector with two elements, e.g., $a_1 = [a_1(x_1), a_1(x_2)]$.

Thus, for neuron 1, $<xa_1(x)>_x = 0.5(x_1a_1(x_1) + x_2a_1(x_2))$. Similarly:

for neuron 2, $<xa_2(x)>_x = 0.5(x_1a_2(x_1) + x_2a_2(x_2))$ and

for neuron 3, $<xa_3(x)>_x = 0.5(x_1a_3(x_1) + x_2a_3(x_2))$.

The Upsilon matrix is then constructed as:

$$

\begin{gather}

\begin{bmatrix}

<xa_1(x)>_x \\

<xa_2(x)>_x \\

<xa_3(x)>_x

\end{bmatrix} =

\begin{bmatrix}

x_1a_1(x_1) + x_2a_1(x_2) \\

x_1a_2(x_1) + x_2a_2(x_2) \\

x_1a_3(x_1) + x_2a_3(x_2)

\end{bmatrix}0.5

\end{gather}

$$

We can factorize the non-scalar part of the Upsilon matrix out like this:

$$

\begin{gather}

\begin{bmatrix}

x_1a_1(x_1) + x_2a_1(x_2) \\

x_1a_2(x_1) + x_2a_2(x_2) \\

x_1a_3(x_1) + x_2a_3(x_2)

\end{bmatrix} =

\begin{bmatrix}

a_1(x_1) & a_1(x_2) \\

a_2(x_1) & a_2(x_2) \\

a_3(x_1) & a_3(x_2)

\end{bmatrix}

\begin{bmatrix}

x_1 \\

x_2

\end{bmatrix}

\end{gather}

$$

This almost looks like the dot product operation, without the extra $0.5$ scalar term. However, because we know that $\phi = \Gamma^{-1}\Upsilon$, and both are “integrated” over $x$, the scalar term cancels out ($1/0.5$ for $\Gamma^{-1}$ multiplied by $0.5$ for $\Upsilon$), so I’m going to ignore the scalar term from now on.

To make the matrix multiplication more visually intuitive, I like to reorganize the matrix multiplication like so:

$$

\Upsilon = \mathbf{A} \cdot \mathbf{x} =

\begin{matrix}

&

\begin{bmatrix}

x_1 \\

x_2

\end{bmatrix} \\

\begin{bmatrix}

a_1(x_1) & a_1(x_2) \\

a_2(x_1) & a_2(x_2) \\

a_3(x_1) & a_3(x_2)

\end{bmatrix} &

\begin{bmatrix}

x_1a_1(x_1) + x_2a_1(x_2) \\

x_1a_2(x_1) + x_2a_2(x_2) \\

x_1a_3(x_1) + x_2a_3(x_2)

\end{bmatrix}

\end{matrix}

$$

I.e., Upsilon = numpy.dot(A, x). As I mentioned before, you can perform the same calculation for the $\Gamma$ matrix, and arrive at the same conclusion.

I hope this clarifies things!