Hi @Choozi,

There are two ways to multiply a vector by a matrix in Nengo, and which one applies depends on how the matrix is populated (i.e., whether it is constant or changes during the simulation). Multiplying by a constant matrix is straightforward and doesn’t need any neurons: you perform the matrix multiplication by passing the matrix as the transform parameter of a nengo.Connection.

Here’s a simple example of multiplying a vector by the matrix:

    [[0, 1, 0, 0],
     [0, 0, 1, 0],
     [0, 0, 0, 1],
     [1, 0, 0, 0]]

And here’s the Nengo code:

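    import nengo
    import numpy as np

    with nengo.Network() as model:
        # Constant input vector
        inp = nengo.Node([1, 2, 3, 4])
        # Passthrough node that receives the transformed vector
        out = nengo.Node(size_in=4)

        # The connection computes matrix @ input directly, with no neurons involved
        matrix = np.array([[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [1, 0, 0, 0]])
        nengo.Connection(inp, out, transform=matrix, synapse=None)

        p_out = nengo.Probe(out)

    with nengo.Simulator(model) as sim:
        sim.run(0.01)
        # Print the last recorded sample of the output node
        print(sim.data[p_out][-1])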

If you run the code above, you should get the output:

[2. 3. 4. 1.]

This is the correct result of multiplying the vector [1, 2, 3, 4] by the matrix, which shows that the transform parameter of the nengo.Connection object does the matrix multiplication for you. As noted above, though, this method only works for a constant matrix: you cannot change the matrix once the network is created.
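If you want to double-check the expected output outside of Nengo, here’s a minimal NumPy sketch of the same product. The variable names are just for illustration:

```python
import numpy as np

# Same matrix and vector as in the Nengo example
matrix = np.array([[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [1, 0, 0, 0]])
vector = np.array([1, 2, 3, 4])

# The connection's transform computes matrix @ vector
result = matrix @ vector
print(result)  # [2 3 4 1]
```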

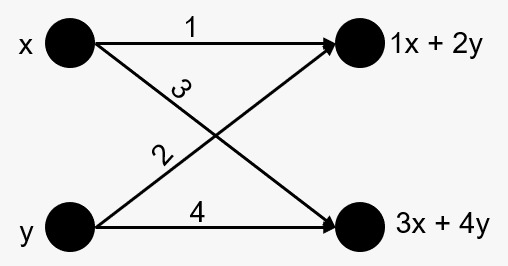

I should note, however, that the method above doesn’t make any assumptions about whether the matrix elements are real or complex. It just treats every matrix element as a plain number, and it is up to the Nengo user to interpret the result however they want to. If you want to do matrix multiplication with complex numbers (or vectors containing complex values), then I’d suggest splitting the vector and the matrix into their respective real and imaginary components, and doing each matrix multiplication separately. I.e., you’ll have 4 connections, one to do each of the following multiplications:

- real x real
- real x imaginary
- imaginary x real
- imaginary x imaginary

You’ll need to sign the connections appropriately to make sure that the math works out though (e.g., imaginary x imaginary needs a -1 factor, since i² = -1). The real x real and imaginary x imaginary products sum to the real part of the result, and the two mixed products sum to the imaginary part.

Side note: our implementation of the circular convolution operator uses both of these techniques, i.e., the transform parameter for the matrix multiplication and the split into real and imaginary parts for the complex numbers. This operator is available as a built-in network in Nengo (nengo.networks.CircularConvolution).
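To give a feel for how the two ideas combine, here’s a NumPy-only sketch of the math behind circular convolution. This is not Nengo’s actual implementation, just an illustration under the assumption that the inputs are real vectors: the Fourier transform is a constant matrix (so it fits the transform approach), and the element-wise product in the Fourier domain is a complex multiplication split into real and imaginary parts. The function name is made up for this example:

```python
import numpy as np

def circular_convolution(a, b):
    """Circular convolution of two real vectors via an explicit DFT matrix."""
    n = len(a)
    k = np.arange(n)
    # DFT matrix, split into real and imaginary parts (both are constant)
    W = np.exp(-2j * np.pi * np.outer(k, k) / n)
    W_re, W_im = W.real, W.imag

    # Forward transforms: the inputs are real, so two real matmuls each
    A_re, A_im = W_re @ a, W_im @ a
    B_re, B_im = W_re @ b, W_im @ b

    # Element-wise complex product, written as four real products
    C_re = A_re * B_re - A_im * B_im  # imaginary x imaginary gets the -1
    C_im = A_re * B_im + A_im * B_re

    # Inverse DFT is conj(W) / n; only the real part of the result is needed
    return (W_re @ C_re + W_im @ C_im) / n

a = np.array([1.0, 2.0, 3.0, 4.0])
b = np.array([0.0, 1.0, 0.0, 0.0])
print(circular_convolution(a, b))
```

Convolving with a shifted unit impulse like b rotates a by one position, so the result here is [4, 1, 2, 3] up to floating-point error.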