Hello! I’ve been doing some work involving SNNs and came across Nengo as a tool for implementing them. So far it’s the best simulator I’ve found, so I’ve spent the past couple of days learning to use it.

I’ve been trying to implement a convolutional autoencoder like the one in DataTechNotes: Convolutional Autoencoder Example with Keras in Python, but I’m having some trouble. I can convert the Keras model with the converter and get the same results as in Keras, but the network stops working properly once it is converted to a spiking network.

Here is the network I’m converting (I replaced max pooling with Conv2D layers with a stride of 2, since there is no max pooling in Nengo):

```python
import tensorflow as tf
from tensorflow.keras.layers import Conv2D, Input, UpSampling2D
from tensorflow.keras.models import Model

# encoder: the stride-2 convolutions stand in for MaxPooling2D
input_img = Input(shape=(28, 28, 1))
conv1 = Conv2D(12, (3, 3), activation=tf.nn.relu, padding='same')(input_img)
max1_conv = Conv2D(12, (3, 3), strides=2, activation=tf.nn.relu, padding='same')(conv1)
conv2 = Conv2D(8, (3, 3), activation=tf.nn.relu, padding='same')(max1_conv)
encoded = Conv2D(8, (3, 3), strides=2, activation=tf.nn.relu, padding='same')(conv2)

# decoder
conv3 = Conv2D(8, (3, 3), activation=tf.nn.relu, padding='same')(encoded)
up1 = UpSampling2D((2, 2))(conv3)
conv4 = Conv2D(12, (3, 3), activation=tf.nn.relu, padding='same')(up1)
up2 = UpSampling2D((2, 2))(conv4)
decoded = Conv2D(1, (3, 3), activation=tf.nn.sigmoid, padding='same')(up2)

autoencoder = Model(input_img, decoded)
```
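Since the two pooling layers were swapped for stride-2 convolutions, here is a quick sanity check that the spatial size still round-trips to 28 × 28. It is plain Python arithmetic, assuming Keras' rule that a `padding='same'` convolution produces `ceil(size / stride)` outputs per axis; the helper names are hypothetical.

```python
import math


def same_conv_size(size, stride):
    # Keras Conv2D with padding='same' yields ceil(size / stride) outputs per axis
    return math.ceil(size / stride)


def trace_side_lengths(size=28):
    """Spatial side length after each layer of the autoencoder (square images)."""
    layers = [
        ("conv1", "conv", 1),
        ("max1_conv", "conv", 2),
        ("conv2", "conv", 1),
        ("encoded", "conv", 2),
        ("conv3", "conv", 1),
        ("up1", "up", 2),
        ("conv4", "conv", 1),
        ("up2", "up", 2),
        ("decoded", "conv", 1),
    ]
    sides = {}
    for name, kind, factor in layers:
        # convolutions divide by their stride; UpSampling2D multiplies by its factor
        size = same_conv_size(size, factor) if kind == "conv" else size * factor
        sides[name] = size
    return sides


print(trace_side_lengths())
```

The bottleneck (`encoded`) comes out at 7 × 7 and the output at 28 × 28, so the stride-2 replacement keeps the same geometry as the pooled original.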

Then I follow the Keras-to-SNN example to convert and train the network:

```python
import nengo_dl
import tensorflow as tf

converter = nengo_dl.Converter(autoencoder)

with nengo_dl.Simulator(converter.net, minibatch_size=200, seed=0) as sim:
    sim.compile(
        optimizer='rmsprop',
        # loss is keyed by the probe on the converted output layer
        loss={converter.outputs[decoded]: tf.losses.binary_crossentropy},
        metrics=tf.metrics.binary_accuracy,
    )

    # the autoencoder reconstructs its input, so the images are also the targets
    sim.fit(
        {converter.inputs[input_img]: train_images},
        {converter.outputs[decoded]: train_images},
        validation_data=(
            {converter.inputs[input_img]: test_images},
            {converter.outputs[decoded]: test_images},
        ),
    )

    sim.save_params('./AE_params')
```
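For reference, the arrays passed to `sim.fit` need a time axis. The Keras-to-SNN example flattens each image and adds a length-1 time axis, giving shape `(n_images, 1, 784)`, and the targets here must use the same layout. The sketch below assumes the images are also scaled to [0, 1] as in the DataTechNotes preprocessing (binary cross-entropy expects targets in that range); `to_nengo_dl_layout` and the random stand-in data are hypothetical.

```python
import numpy as np


def to_nengo_dl_layout(images):
    """Scale uint8 pixels to [0, 1] and reshape (n, 28, 28) -> (n, 1, 784).

    Axis 1 is the time axis NengoDL expects; axis 2 holds the flattened pixels.
    """
    images = images.astype(np.float32) / 255.0
    return images.reshape((images.shape[0], 1, -1))


# hypothetical stand-in for the MNIST uint8 arrays, shape (n_images, 28, 28)
rng = np.random.default_rng(0)
raw_images = rng.integers(0, 256, size=(100, 28, 28), dtype=np.uint8)

train_images = to_nengo_dl_layout(raw_images)
print(train_images.shape)  # (100, 1, 784)
```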

Finally, I use the run_network function as in the example to run the spiking network:

```python
import matplotlib.pyplot as plt
import nengo
import nengo_dl
import numpy as np
import tensorflow as tf


def run_network(
    activation,
    params_file='AE_params',
    n_steps=30,
    scale=1,
    synapse=None,
    n_test=400,
):
    # convert keras model to nengo network, swapping ReLU for the given neuron type
    nengo_converter = nengo_dl.Converter(
        autoencoder,
        swap_activations={tf.nn.relu: activation},
        scale_firing_rates=scale,
        synapse=synapse,
    )
    net = nengo_converter.net

    # get i/o objects
    nengo_input = nengo_converter.inputs[input_img]
    nengo_output = nengo_converter.outputs[decoded]

    # repeat inputs for n_steps timesteps: (n_test, 1, 784) -> (n_test, n_steps, 784)
    tiled_test_images = np.tile(test_images[:n_test], (1, n_steps, 1))

    # options to speed up the simulation
    with nengo_converter.net:
        nengo_dl.configure_settings(stateful=False)

    # build network, load weights, run inference on test images
    with nengo_dl.Simulator(net, minibatch_size=50, seed=0) as nengo_sim:
        nengo_sim.load_params(params_file)
        data = nengo_sim.predict({nengo_input: tiled_test_images})

    # plot originals (top row) against the output at the last timestep (bottom row)
    imgs = data[nengo_output]
    n = 5
    for i in range(n):
        ax = plt.subplot(2, n, i + 1)
        plt.imshow(np.reshape(test_images[i], (28, 28)))
        ax.get_xaxis().set_visible(False)
        ax.get_yaxis().set_visible(False)

        ax = plt.subplot(2, n, n + i + 1)
        plt.imshow(imgs[i, n_steps - 1].reshape((28, 28)))
        ax.get_xaxis().set_visible(False)
        ax.get_yaxis().set_visible(False)
    plt.show()


run_network(activation=nengo.SpikingRectifiedLinear(), synapse=0.001)
```
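To make the tiling step in `run_network` concrete: `np.tile` with reps `(1, n_steps, 1)` repeats each image along the time axis only, and the output of `predict()` for `nengo_output` comes back in the same `(batch, time, 784)` layout. The arrays below are hypothetical stand-ins (random inputs, an all-zero output) used only to show the shapes.

```python
import numpy as np

n_test, n_steps = 8, 30
# hypothetical stand-in for test_images already in the (n, 1, 784) layout
test_images = np.random.default_rng(0).random((n_test, 1, 784)).astype(np.float32)

# repeat each image along the time axis only
tiled_test_images = np.tile(test_images, (1, n_steps, 1))
print(tiled_test_images.shape)  # (8, 30, 784)

# every timestep presents the same image
same_every_step = all(
    np.array_equal(tiled_test_images[:, t], test_images[:, 0]) for t in range(n_steps)
)

# stand-in for data[nengo_output]: same (batch, time, 784) layout as the input
fake_output = np.zeros((n_test, n_steps, 784), dtype=np.float32)
# indexing [i, n_steps - 1] keeps only image i's final timestep
last_step = fake_output[0, n_steps - 1].reshape((28, 28))
print(last_step.shape)  # (28, 28)
```

So each reconstruction in the bottom row of the plot reflects the single final timestep of the run, not an average over the 30 steps.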

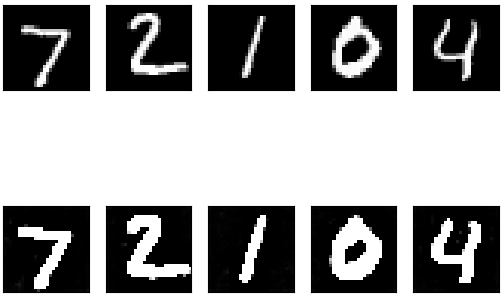

Once everything is finished, I’m left with this output:

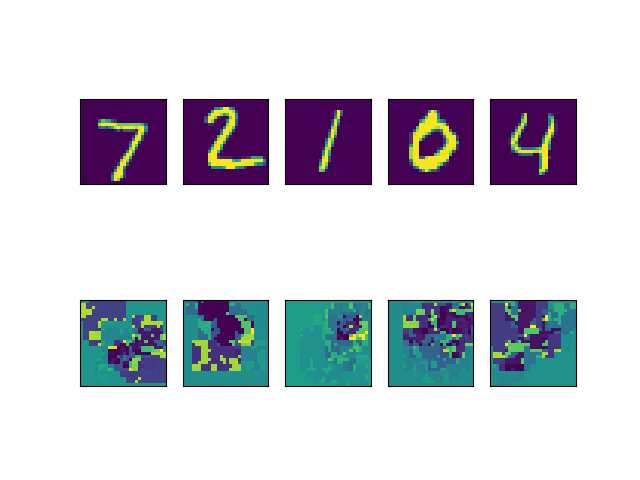

I’ve tried improving the network as in the examples, with synaptic smoothing, firing rate scaling, and firing rate regularization, but each of them results in something that looks like this.
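As background on why firing rate scaling matters in a short run: with `nengo.SpikingRectifiedLinear`, an input of x fires at roughly x Hz, so activations of order 1 produce very few spikes in 30 steps of 1 ms. The NumPy sketch below is my own simplified integrate-and-floor spiking ReLU, not Nengo's source. It assumes dt = 0.001 s and that `scale_firing_rates` multiplies the neuron input by `scale` and divides the output by `scale`, so the ideal time average is unchanged; `spiking_relu_mean` is a hypothetical helper.

```python
import numpy as np


def spiking_relu_mean(x, scale=1.0, n_steps=30, dt=0.001):
    """Time-averaged output of a simplified spiking ReLU (a sketch, not Nengo's code).

    The input is multiplied by `scale` (higher firing rate) and each spike is
    divided by `scale`, so a perfectly averaged output would equal max(x, 0).
    """
    x = np.asarray(x, dtype=float)
    voltage = np.zeros_like(x)
    total = np.zeros_like(x)
    for _ in range(n_steps):
        # integrate the rectified, scaled firing rate over one timestep
        voltage += np.maximum(scale * x, 0.0) * dt
        # emit one spike for every whole unit of accumulated voltage
        n_spikes = np.floor(voltage)
        voltage -= n_spikes
        # each spike is a 1/dt impulse, shrunk by 1/scale
        total += n_spikes / (scale * dt)
    return total / n_steps


activations = np.array([0.5, 2.0, 10.0])
for scale in (1, 10, 100):
    print(scale, spiking_relu_mean(activations, scale=scale))
```

Under these assumptions, `scale=1` gives an all-zero time average for all three activations within 30 steps, while `scale=100` brings the estimate for 10.0 back to about 10. This only illustrates the mechanism; it does not show which layers of the autoencoder are affected.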

Does anyone have suggestions on how to properly convert the convolutional autoencoder into its spiking equivalent?