You’ll want `hidden_to_memory = True` to get the h_{t-1} -> u_t connection shown in the architecture diagram, and `input_to_hidden = True` to get the x_t -> Nonlinear connection.

Also note that the LMU accepts an arbitrary `hidden_cell`, so you can use a dense layer (or a similar non-recurrent layer) there to avoid the hidden-to-hidden connection.
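To make the effect of these flags concrete, here is a minimal NumPy sketch of a single step of an LMU-style cell. It is only an illustration under assumptions, not the library's implementation: `lmu_step`, `make_params`, the `hidden_to_hidden` argument, and all weight names are hypothetical, the `A` and `B` matrices are random placeholders rather than the actual discretized memory matrices, and the update follows the general LMU formulation rather than the exact equations of the paper discussed here.

```python
import numpy as np


def make_params(d_x, d_h, order, rng):
    """Random placeholder weights (hypothetical names, for illustration only)."""
    return {
        "e_x": rng.standard_normal(d_x),               # input -> u_t encoder
        "e_h": rng.standard_normal(d_h),               # hidden -> u_t encoder
        "A": 0.1 * rng.standard_normal((order, order)),  # placeholder memory dynamics
        "B": rng.standard_normal(order),               # placeholder memory input vector
        "W_m": rng.standard_normal((d_h, order)),      # memory -> hidden
        "W_x": rng.standard_normal((d_h, d_x)),        # input -> hidden
        "W_h": rng.standard_normal((d_h, d_h)),        # hidden -> hidden
    }


def lmu_step(x_t, h_prev, m_prev, p,
             hidden_to_memory=True, input_to_hidden=True,
             hidden_to_hidden=False):
    """One step of a simplified LMU-style cell with a scalar memory input u_t."""
    # Signal written into the linear memory.
    u_t = p["e_x"] @ x_t
    if hidden_to_memory:
        u_t = u_t + p["e_h"] @ h_prev      # the h_{t-1} -> u_t connection

    # Linear memory update.
    m_t = p["A"] @ m_prev + p["B"] * u_t

    # Nonlinear (hidden) part.
    pre = p["W_m"] @ m_t
    if input_to_hidden:
        pre = pre + p["W_x"] @ x_t         # the x_t -> Nonlinear connection
    if hidden_to_hidden:
        pre = pre + p["W_h"] @ h_prev      # absent when the hidden part is dense-like
    h_t = np.tanh(pre)
    return h_t, m_t


rng = np.random.default_rng(0)
params = make_params(d_x=3, d_h=4, order=5, rng=rng)
x = rng.standard_normal(3)
h = np.zeros(4)
m = np.zeros(5)
h, m = lmu_step(x, h, m, params)
```

With `hidden_to_hidden=False` (the dense-like case), the previous hidden state influences the next step only through `u_t`, which is the h_{t-1} -> u_t path that `hidden_to_memory` controls.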

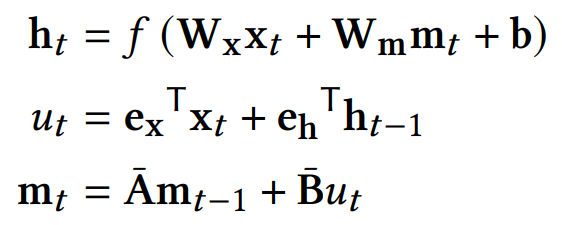

I took another look at the paper and this part of the description:

> we have removed the connection from the nonlinear to the linear layer

is actually incorrect, since we have `hidden_to_memory = True`. The equations from the paper (below) and the architecture diagram are correct. Thanks for bringing this to our attention.