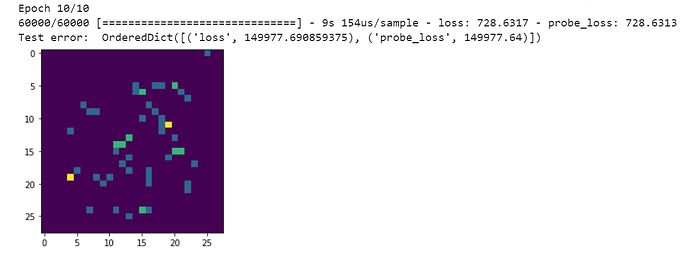

To get started with spiking autoencoders, I’ve been trying to follow the spiking MNIST example as well as other Nengo and NengoDL examples by building a simple autoencoder that reconstructs the MNIST digits. I’ve set up my network and I believe it is being trained properly, since the training loss values look right. It works with regular (non-spiking) neurons, but I’m having issues with evaluation when I switch to spiking neurons.

I’m confused about how evaluation works for a spiking autoencoder model. I changed my test data so that each image is repeated for a number of time steps. I thought it would be as straightforward as calling sim.evaluate(test_data, test_data), since my target is the same as the input, but this doesn’t seem to work. I then tried to display a sample picture by following the same approach as for the non-spiking autoencoder, i.e.

output = sim.predict(test_data[:minibatch_size])

plt.imshow(output[p_c][29].reshape((28, 28)))

When I do that, I get the following error: ValueError: cannot reshape array of size 23520 into shape (28,28). So… something is definitely very wrong. What I notice here is that I’m using 30 time steps, and this array size of 23520 = 784*30, so I must not be collecting the right data to reshape and display.
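The size mismatch can be reproduced with plain NumPy. This is only a sketch under an assumption: that the probe output has shape (batch, time steps, pixels), which is consistent with the 23520 in the error but is not confirmed by anything above. The array `probe_output` is a zero-filled stand-in for `output[p_c]`, and the batch size of 32 is made up for illustration. Under that assumption, the first index selects an example rather than a time step, so `[29]` returns all 30 time steps of example 29.

```python
import numpy as np

# Hypothetical sizes; only n_steps=30 and 784 pixels come from the post.
minibatch_size, n_steps, n_pixels = 32, 30, 784

# Stand-in for output[p_c], ASSUMED to be (batch, time, pixels).
probe_output = np.zeros((minibatch_size, n_steps, n_pixels))

# Indexing with a single integer picks an example, not a time step.
per_example = probe_output[29]
print(per_example.shape, per_example.size)  # (30, 784) 23520

# Picking one example AND the final time step leaves 784 values,
# which reshape cleanly into a 28x28 image.
final_frame = probe_output[0, -1]
image = final_frame.reshape((28, 28))
print(image.shape)  # (28, 28)
```

If the assumption holds, something like `plt.imshow(output[p_c][0, -1].reshape((28, 28)))` would show the reconstruction of the first test image at the last time step.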

I get that I want to evaluate at the final time step of the simulation since it’s a spiking network, but I’m not quite sure how to do that and display a sample reconstruction. I’m having trouble following the explanation in the spiking MNIST example and would appreciate more clarity on how exactly to set up the evaluation with spiking neurons, given the added temporal dimension. Thank you!
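To make "evaluate at the final time step" concrete, here is a rough NumPy sketch of the quantity I think I want: the mean squared error between target and reconstruction, computed only on the last time step. The helper `final_step_mse` is a hypothetical name, the data is a random stand-in, and the (batch, time steps, pixels) layout is an assumption. In NengoDL itself I assume this would need to be written as a TensorFlow loss function and passed to sim.compile before calling sim.evaluate, but I have not verified that.

```python
import numpy as np

def final_step_mse(y_true, y_pred):
    """MSE over the last time step only.

    Both arrays are ASSUMED to have shape (batch, n_steps, n_pixels).
    """
    diff = y_true[:, -1] - y_pred[:, -1]
    return float(np.mean(diff ** 2))

n_steps = 30
rng = np.random.default_rng(0)
images = rng.random((4, 784))  # stand-in for flattened test images

# Repeat each image along a new time axis: (4, 784) -> (4, 30, 784).
targets = np.tile(images[:, None, :], (1, n_steps, 1))

# Fake network output: wrong everywhere except the final time step.
outputs = np.zeros_like(targets)
outputs[:, -1] = images

print(targets.shape)                     # (4, 30, 784)
print(final_step_mse(targets, outputs))  # 0.0
```

The earlier time steps are ignored on purpose, since a spiking network needs some time to settle before its output is meaningful.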