Hi @toddswri,

It is possible to get NengoOCL working alongside NengoDL and NengoGUI, and the installation instructions are below. I’ll start by creating a new environment, because I don’t know what state your existing environment is in and a blank slate is the easiest starting point:

Create a new Conda environment

Replace <env_name> with a name you like. Since you were using Python 3.7, I’ve used the same version here:

conda create -n <env_name> python=3.7

conda activate <env_name>

Install the various GPU libraries

conda install cudatoolkit

conda install -c conda-forge cudnn pyopencl

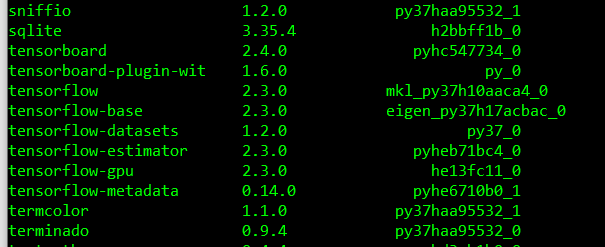

Along with some other packages, the following versions for cudatoolkit, cudnn and pyopencl were installed for me:

cudatoolkit conda-forge/win-64::cudatoolkit-11.2.2-h933977f_8

cudnn conda-forge/win-64::cudnn-8.1.0.77-h3e0f4f4_0

pyopencl conda-forge/win-64::pyopencl-2021.1.6-py37hb605e8c_0

Install TensorFlow

Note the use of pip instead of conda here!

pip install tensorflow

You should see it install v2.4.1, along with a number of other packages:

Collecting tensorflow

Using cached tensorflow-2.4.1-cp37-cp37m-win_amd64.whl (370.7 MB)

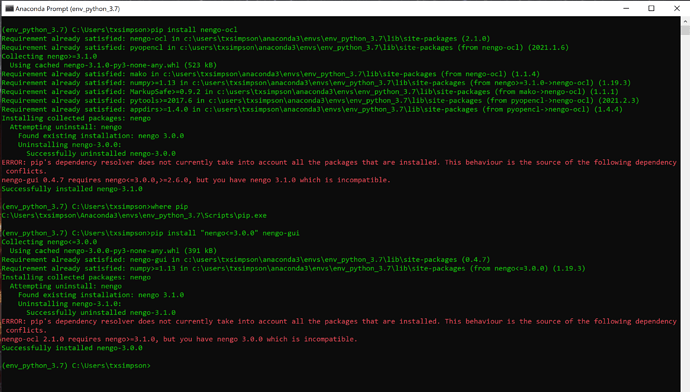

Install Nengo and related Nengo packages

You can now install Nengo and the related packages. The order doesn’t matter, but note that the NengoOCL version is pinned to 2.0.0.

pip install nengo nengo-gui nengo-dl "nengo-ocl==2.0.0"

For my environment, it installed these versions (which should all work together). You might see two listings for nengo, but the second one should be v3.0.0.

Collecting nengo-dl

Using cached nengo_dl-3.4.0-py3-none-any.whl

Collecting nengo-gui

Using cached nengo_gui-0.4.7-py3-none-any.whl (843 kB)

Collecting nengo-ocl==2.0.0

Using cached nengo_ocl-2.0.0-py3-none-any.whl (77 kB)

Collecting nengo

Using cached nengo-3.0.0-py3-none-any.whl (391 kB)

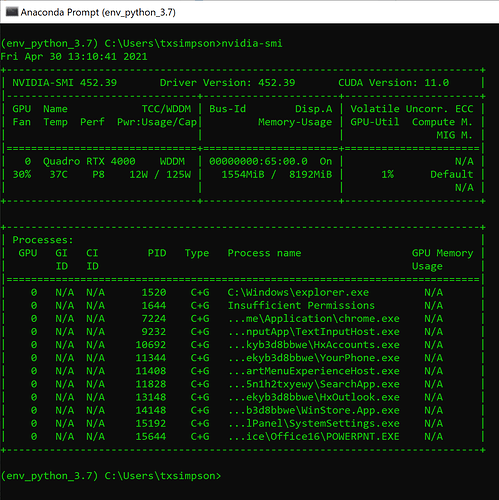

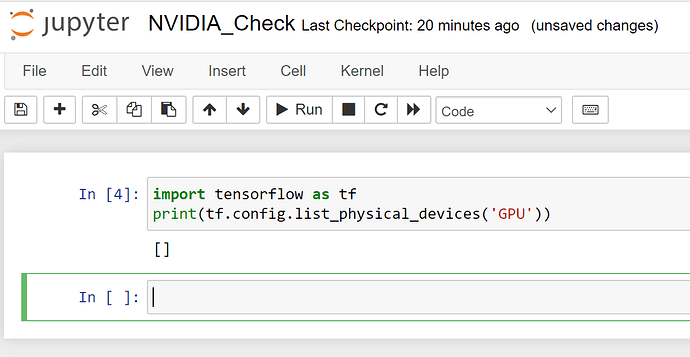

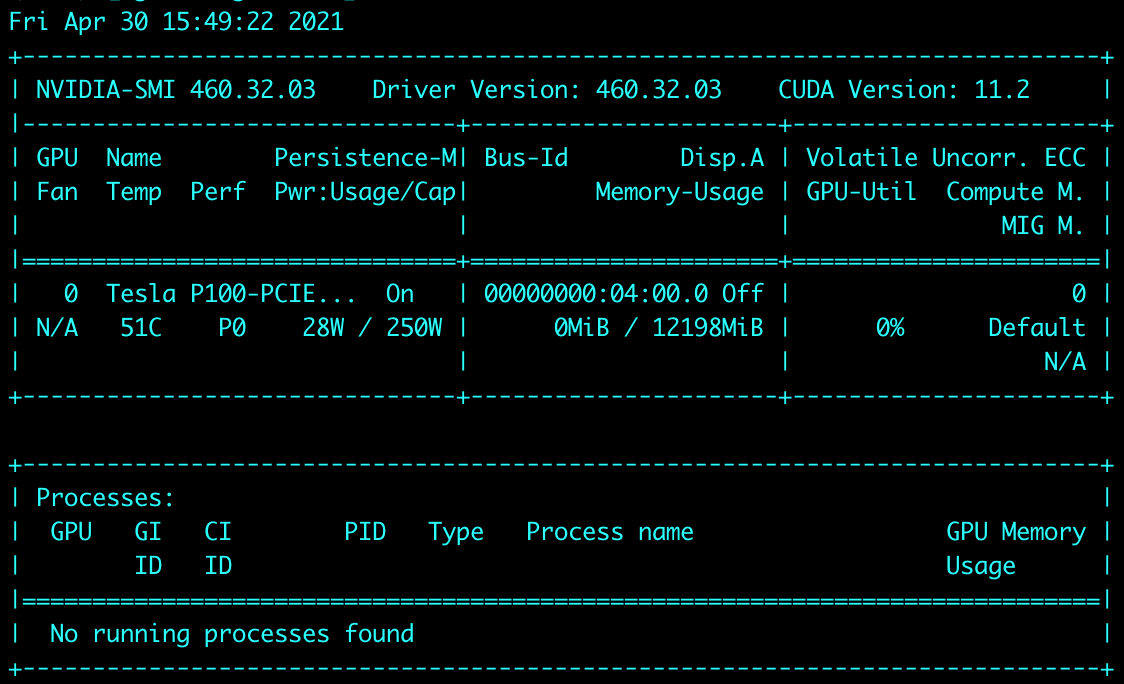
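If you want to confirm what actually ended up in the environment, a small script along these lines can print the installed versions. This is only a sketch: the helper name `installed_versions` is my own, and the `pkg_resources` fallback assumes setuptools is present in the environment (which is normally the case for a fresh Conda environment on Python 3.7).

```python
# Sketch: report installed versions of the packages from the steps above.
try:
    # Available in the standard library from Python 3.8 onwards.
    from importlib.metadata import PackageNotFoundError, version
except ImportError:
    # Python 3.7 fallback (assumes setuptools is installed).
    import pkg_resources

    PackageNotFoundError = pkg_resources.DistributionNotFound

    def version(name):
        return pkg_resources.get_distribution(name).version


def installed_versions(names):
    """Map each package name to its installed version, or None if missing."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


packages = ["tensorflow", "nengo", "nengo-dl", "nengo-gui", "nengo-ocl", "pyopencl"]
for name, ver in installed_versions(packages).items():
    print(f"{name}: {ver or 'not installed'}")
```

Any package reported as "not installed" points to the step that needs to be repeated.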

Testing the GPU

You can now proceed to test the GPU in Python. For TensorFlow (as before) do:

import tensorflow as tf

print(tf.config.list_physical_devices("GPU"))

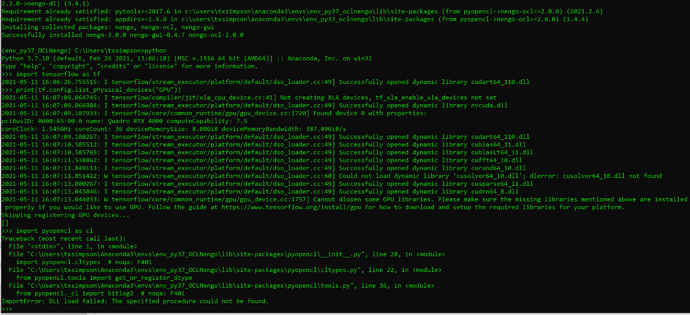
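An empty list from `tf.config.list_physical_devices("GPU")` means TensorFlow could not find a usable GPU. As a rough sketch, the check can be wrapped so the result is spelled out in words. The helper name `gpu_report` and its messages are my own, not part of TensorFlow.

```python
# Sketch: turn the TensorFlow device list into a readable message.
try:
    import tensorflow as tf
except ImportError:
    tf = None  # TensorFlow is not installed in this environment


def gpu_report(devices):
    """Describe the list returned by tf.config.list_physical_devices("GPU")."""
    if not devices:
        return "TensorFlow sees no GPU; check the cudatoolkit and cudnn install."
    names = ", ".join(device.name for device in devices)
    return f"TensorFlow sees {len(devices)} GPU(s): {names}"


if tf is not None:
    print(gpu_report(tf.config.list_physical_devices("GPU")))
```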

For PyOpenCL (which NengoOCL uses), do:

import pyopencl as cl

print(cl.get_platforms())

You should see a list of available platforms to use. Sometimes PyOpenCL detects your CPU as an available platform for running OpenCL code, in which case the CPU also shows up in this list. For my machine, only one entry shows up in the list:

[<pyopencl.Platform 'NVIDIA CUDA' at 0x19b412798c0>]

Next, you can print the available devices for the platform you want to use. For my machine, since there is only one available OCL platform, I use the list index 0, but your machine might be different:

print(cl.get_platforms()[0].get_devices())

With that, I see this:

[<pyopencl.Device 'NVIDIA GeForce RTX 3090' on 'NVIDIA CUDA' at 0x19b412799b0>]

indicating that my RTX 3090 is visible to PyOpenCL.
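If your machine lists more than one platform or device, it can help to print every platform/device pair with its index, and then hand the chosen device to NengoOCL explicitly. The following is a minimal sketch: `list_opencl_devices` and `run_on_device` are names I made up, the tiny one-ensemble network is only there to have something to simulate, and the indices default to 0 as on my machine. It relies on `nengo_ocl.Simulator` accepting a `context` argument, as described in the NengoOCL README.

```python
# Sketch: enumerate OpenCL devices and run a tiny Nengo model on a chosen one.
def list_opencl_devices(platforms):
    """Return (platform_index, device_index, platform_name, device_name) tuples.

    `platforms` is the list returned by pyopencl.get_platforms().
    """
    rows = []
    for p_idx, platform in enumerate(platforms):
        for d_idx, device in enumerate(platform.get_devices()):
            rows.append((p_idx, d_idx, platform.name, device.name))
    return rows


def run_on_device(platform_index=0, device_index=0, seconds=0.1):
    """Simulate a small network with NengoOCL on the selected OpenCL device."""
    # Imported here so the listing helper above works without these packages.
    import nengo
    import nengo_ocl
    import pyopencl as cl

    device = cl.get_platforms()[platform_index].get_devices()[device_index]
    ctx = cl.Context(devices=[device])

    with nengo.Network() as model:
        nengo.Ensemble(50, dimensions=1)  # placeholder model for the test run

    sim = nengo_ocl.Simulator(model, context=ctx)
    sim.run(seconds)
    return device.name
```

In the environment set up above, `print(list_opencl_devices(cl.get_platforms()))` shows the indices to use, and `run_on_device(0, 0)` should return the name of the device the simulation ran on.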