I am curious whether anyone has successfully implemented a class activation mapping (CAM) style output for nengo-dl. I’m currently trying to do it through a GlobalAveragePooling2D layer, but am not sure whether it’s possible to extract the layer weights. It would be interesting to map the activations across timesteps. Is such an approach even possible with nengo-dl?

You can definitely extract whatever layer weights you need from nengo-dl. It depends on how you are implementing the layers, but for example, if you have nengo.Connection(layer0.neurons, layer1.neurons, ...), you can get the weights via:

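    import nengo
    import nengo_dl

    with nengo.Network() as net:
        ...
        # keep a handle to the connection whose weights you want to inspect
        conn = nengo.Connection(layer0.neurons, layer1.neurons, ...)

    with nengo_dl.Simulator(net) as sim:
        ...
        # sim.data holds the built parameters of each object, including weights
        print(sim.data[conn].weights)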

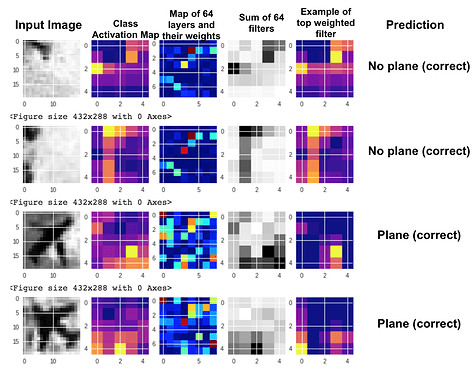

Interesting. I’ve extracted the layer weights for a GlobalAveragePooling2D layer, and the results don’t look much like those from conventional CNNs. I’ve attached a couple of screenshots that hopefully explain what I’m observing. The model classifies the images correctly, but the class activation maps don’t correspond to object locations.

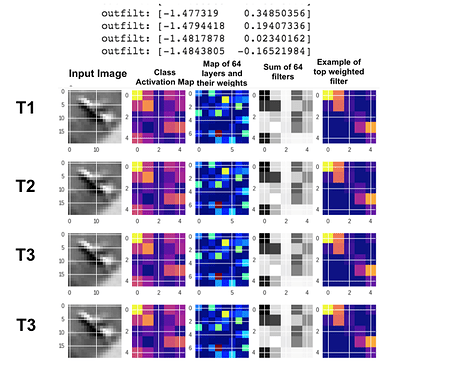

Additionally, and this has me a bit confused about nengo-dl functionality: while the weights seem to change from image to image as seen above (which is what I’d expect), they don’t change from timestep to timestep within an image. The outfilt correctly shifts over time, but the layer weights themselves stay the same from timestep to timestep. Is that to be expected? (Disclaimer: I’m not a Nengo expert, but I have been enjoying incorporating it into my research!)

I’ve never worked with class activation maps, so I’m not an expert, but from what I understand I wouldn’t expect nengo-dl CAMs to be any different from those of deep networks built in any other framework. So if you are seeing differences, I suspect it’s an implementation quirk rather than a theoretical difference. But again, I’ve never looked at CAMs in Nengo, so that’s just guesswork on my part!
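For comparison, here is a minimal NumPy sketch of the standard CAM computation, written independently of nengo-dl. It assumes the usual conv, then GlobalAveragePooling2D, then Dense architecture. Note that in Keras the pooling layer itself has no trainable weights, so the per-class weights come from the dense layer that follows it. The names class_activation_map, feature_maps and dense_weights are hypothetical, and the data is random, purely to show the shapes involved.

```python
import numpy as np


def class_activation_map(feature_maps, dense_weights, class_idx):
    """Compute a CAM for one class.

    feature_maps:  (H, W, K) activations of the last conv layer
    dense_weights: (K, n_classes) weights of the dense layer after pooling
    """
    # CAM[y, x] = sum_k dense_weights[k, class_idx] * feature_maps[y, x, k]
    return feature_maps @ dense_weights[:, class_idx]


rng = np.random.default_rng(0)
features = rng.random((7, 7, 16))          # stand-in conv activations
weights = rng.standard_normal((16, 10))    # stand-in dense weights

cam = class_activation_map(features, weights, class_idx=3)

# Sanity check: with no bias, the spatial mean of the CAM equals the class
# logit obtained by global average pooling followed by the dense layer.
logit = features.mean(axis=(0, 1)) @ weights[:, 3]
```

If a map built this way from your extracted arrays does not average to the class score, that would suggest the weights or activations are being taken from a different layer than intended.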

With respect to the weights changing over time, we would expect them to be constant unless you define an online learning rule (such as nengo.PES), which modifies the weights while the simulation runs. The sim.train weight modifications are static: they change the weights during training, but when you’re just running inference later on, the weights won’t change.
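To make that concrete, here is a rough NumPy sketch of a per-timestep map: the class weights stay fixed, and only the probed activity changes from step to step. Everything here is an assumption for illustration, including the array names, the shapes, and the random data standing in for probed conv-layer output. The running mean is just a crude substitute for a synaptic filter on the output, not what nengo-dl does internally.

```python
import numpy as np

rng = np.random.default_rng(1)
n_steps, height, width, n_channels = 30, 7, 7, 16

# Hypothetical probed conv activity over time: (timesteps, H, W, channels).
activity = rng.random((n_steps, height, width, n_channels))

# Weights for one class from the dense layer after pooling.
# These are fixed at inference time, so there is one vector for all timesteps.
class_weights = rng.standard_normal(n_channels)

# One map per timestep: the same weights applied to each timestep's activity.
cams = np.einsum("thwk,k->thw", activity, class_weights)

# Running mean over time to smooth the step-to-step variation.
steps = np.arange(1, n_steps + 1)[:, None, None]
running_cam = np.cumsum(cams, axis=0) / steps
```

Any change over time in cams comes entirely from activity, which matches seeing the filtered output shift while the extracted weights stay the same.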