Dear all

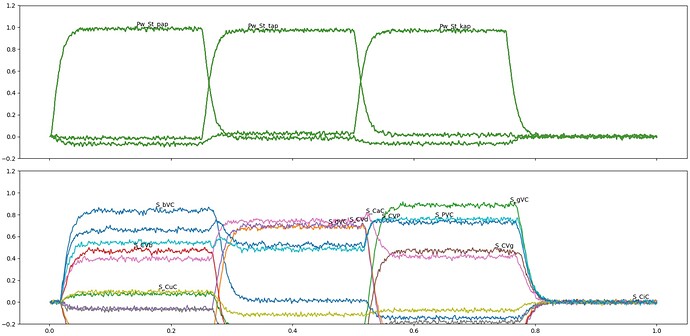

I have two state maps with different vocabs:

    model.syll_phono = State(
        subdimensions=subdims, neurons_per_dimension=n_neurons,
        vocab=vocab_syll_phono)

with vocab:

    vocab_syll_phono_keys = ['Pw_St_pap', 'Pw_St_tap', 'Pw_St_kap']

and

    model.syll_score = State(
        subdimensions=subdims, neurons_per_dimension=n_neurons,
        vocab=vocab_syll_score)

with vocab:

    syll_score_keys = [
        # pap, tap, kap, …
        'S_bVC',  # 0
        'S_dVC',  # 1
        'S_gVC',  # 2
        'S_CVb',  # 3
        'S_CVd',  # 4
        'S_CVg',  # 5
        'S_CaC',  # 6
        'S_CiC',  # 7
        'S_CuC',  # 8
        'S_PVC',  # 9
        'S_CVP',  # 10
    ]

and a mapping between them:

    mapping_syll_phono2score = {
        # pap, tap, kap, …
        'Pw_St_pap': 'S_bVC',
        'Pw_St_pap': 'S_CVb',
        'Pw_St_pap': 'S_CaC',
        'Pw_St_pap': 'S_PVC',
        'Pw_St_pap': 'S_CVP',
        'Pw_St_tap': 'S_dVC',
        'Pw_St_tap': 'S_CVb',
        'Pw_St_tap': 'S_CaC',
        'Pw_St_tap': 'S_PVC',
        'Pw_St_tap': 'S_CVP',
        'Pw_St_kap': 'S_gVC',
        'Pw_St_kap': 'S_CVb',
        'Pw_St_kap': 'S_CaC',
        'Pw_St_kap': 'S_PVC',
        'Pw_St_kap': 'S_CVP',
    }
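One thing worth checking in a mapping written this way: a Python dict literal keeps only one value per key, so when the same key appears several times, the last entry silently replaces the earlier ones. The sketch below illustrates this with a shortened copy of the mapping and shows one possible way to keep all targets by collecting them in lists. The names `one_to_one`, `pairs`, and `one_to_many` are hypothetical and not part of the original script, and nothing is assumed here about whether `ThresholdingAssocMem` accepts such a structure directly.

```python
# Shortened copy of the mapping, written as a dict literal with repeated keys.
one_to_one = {
    'Pw_St_pap': 'S_bVC',
    'Pw_St_pap': 'S_CVb',
    'Pw_St_pap': 'S_CaC',
    'Pw_St_tap': 'S_dVC',
    'Pw_St_tap': 'S_CVb',
    'Pw_St_tap': 'S_CaC',
}

# Python keeps only the last value written for each repeated key.
print(one_to_one)  # {'Pw_St_pap': 'S_CaC', 'Pw_St_tap': 'S_CaC'}

# Hypothetical alternative: list the pairs explicitly and group them per key.
pairs = [
    ('Pw_St_pap', 'S_bVC'),
    ('Pw_St_pap', 'S_CVb'),
    ('Pw_St_pap', 'S_CaC'),
    ('Pw_St_tap', 'S_dVC'),
    ('Pw_St_tap', 'S_CVb'),
    ('Pw_St_tap', 'S_CaC'),
]

one_to_many = {}
for source, target in pairs:
    # Append each target to the list stored under its source key.
    one_to_many.setdefault(source, []).append(target)

print(one_to_many)
```

Printing `len(mapping_syll_phono2score)` in the full script would be a quick way to see how many entries actually survive.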

My problem: if I implement an association from the syll_phono state map to the syll_score state map using:

    assoc_mem_syll_phono2syll_score = ThresholdingAssocMem(
        input_vocab=vocab_syll_phono, output_vocab=vocab_syll_score,
        mapping=mapping_syll_phono2score, threshold=0.3, function=lambda x: x > 0.)

and

    syll_phono >> assoc_mem_syll_phono2syll_score
    assoc_mem_syll_phono2syll_score >> syll_score

then I want to get ALL S-pointers in syll_score that are defined in the mapping for the syllable /pap/, /tap/, or /kap/.

… I will attach a (failing) Python code example for this problem.

Is it possible to have an additive overlay of these resulting S-pointers in the syll_score state map?
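To make the question concrete, here is a minimal plain-Python sketch of what such an additive overlay would look like numerically. It does not use nengo_spa: one-hot lists stand in for the (nearly orthogonal) S-pointers, and the names `score_vectors`, `targets_for_pap`, `overlay`, and `dot` are assumptions for illustration only. In nengo_spa, the analogous step might be to add one summed pointer per syllable to `vocab_syll_score` and map each `Pw_St_*` key to it, but that is an untested assumption.

```python
syll_score_keys = [
    'S_bVC', 'S_dVC', 'S_gVC', 'S_CVb', 'S_CVd', 'S_CVg',
    'S_CaC', 'S_CiC', 'S_CuC', 'S_PVC', 'S_CVP',
]

# Assumption: one-hot vectors stand in for the real semantic pointers.
dim = len(syll_score_keys)
score_vectors = {
    key: [1.0 if i == j else 0.0 for j in range(dim)]
    for i, key in enumerate(syll_score_keys)
}

# Targets listed for the syllable /pap/ in the mapping.
targets_for_pap = ['S_bVC', 'S_CVb', 'S_CaC', 'S_PVC', 'S_CVP']

# Additive overlay: element-wise sum of the target vectors.
overlay = [
    sum(components)
    for components in zip(*(score_vectors[k] for k in targets_for_pap))
]


def dot(a, b):
    """Dot product, used here as the similarity measure."""
    return sum(x * y for x, y in zip(a, b))


# Similarity of the overlay to every S-pointer in the vocabulary.
similarities = {key: dot(overlay, vec) for key, vec in score_vectors.items()}

# Keys whose similarity exceeds the 0.3 threshold used in the associative memory.
active = [key for key, sim in similarities.items() if sim > 0.3]
print(active)
```

With real pointers that are only approximately orthogonal, the similarities would be close to 1 and 0 rather than exactly 1 and 0, so the choice of threshold matters more than in this idealized sketch.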

Kind regards

Bernd

Kroeger_one2many.py (5.4 KB)